
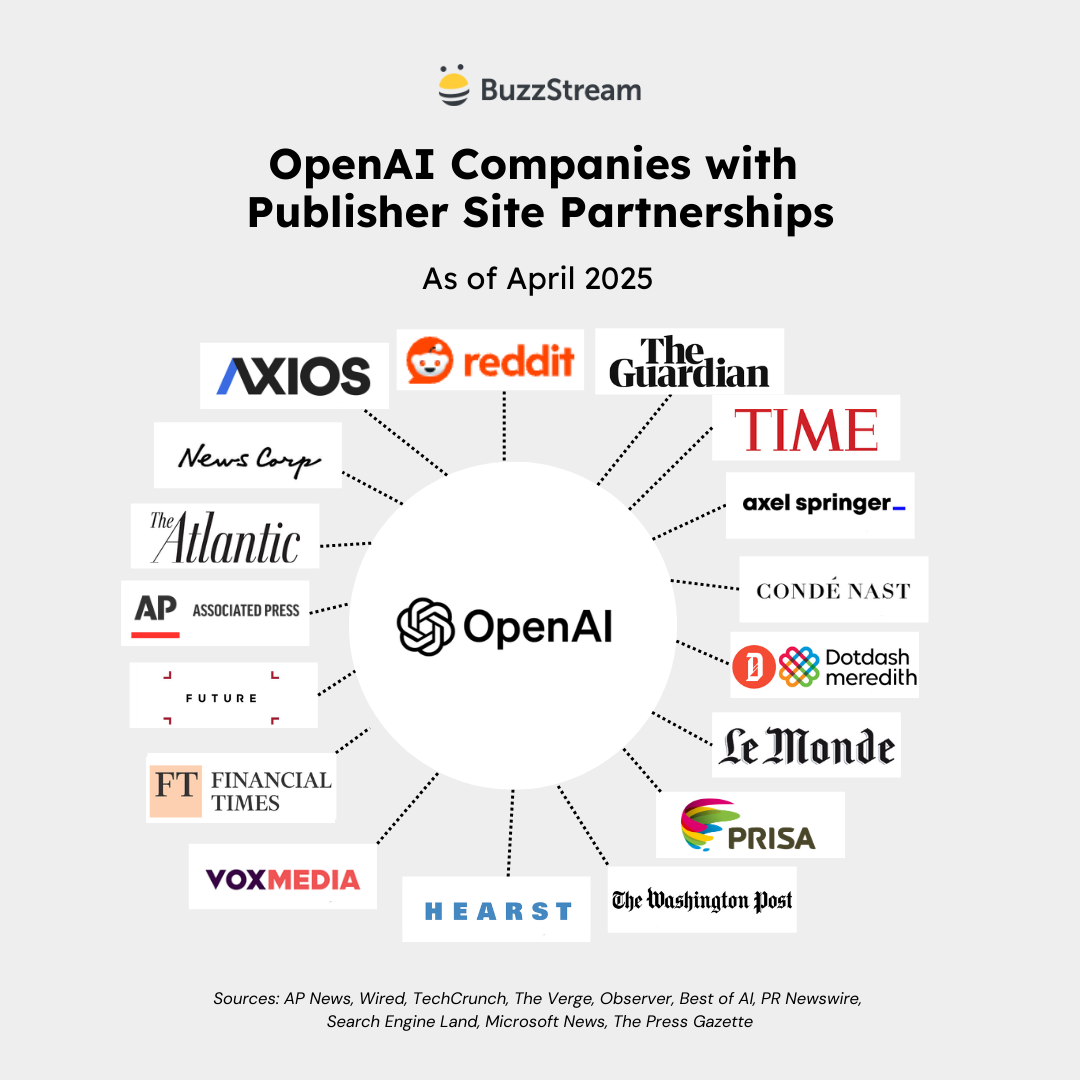

April 23, 2025 Update: I’ve updated this post to reflect all publisher partnerships with AI companies as of April 23, 2025. OpenAI and Microsoft have been busy.

- LLMs from OpenAI and Google are trained on heavily curated datasets.

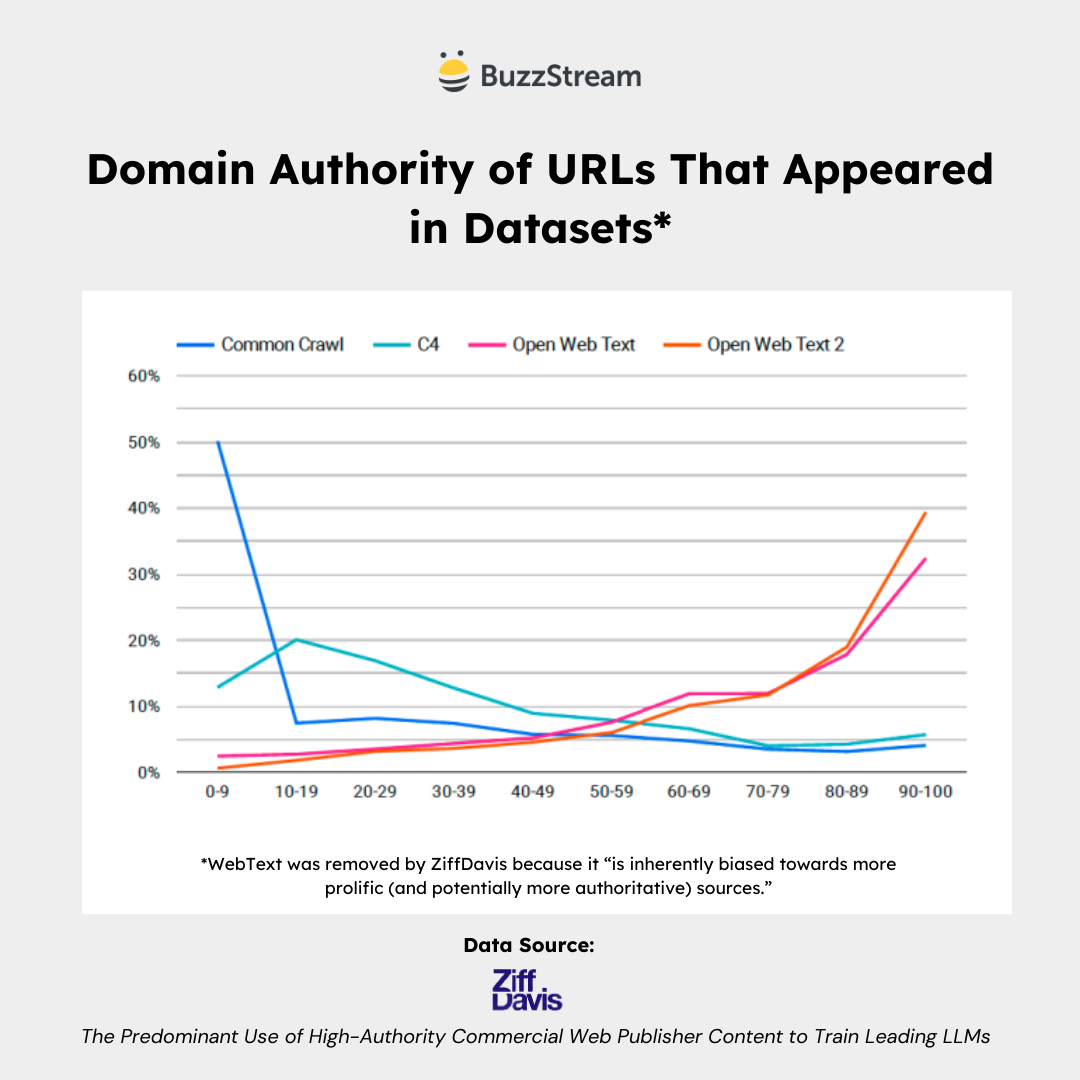

- Ziff Davis’ study shows that heavily curated datasets contain a higher proportion of high-DA websites.

- WebText (used to train OpenAI’s GPT-2) contains high-DA websites.

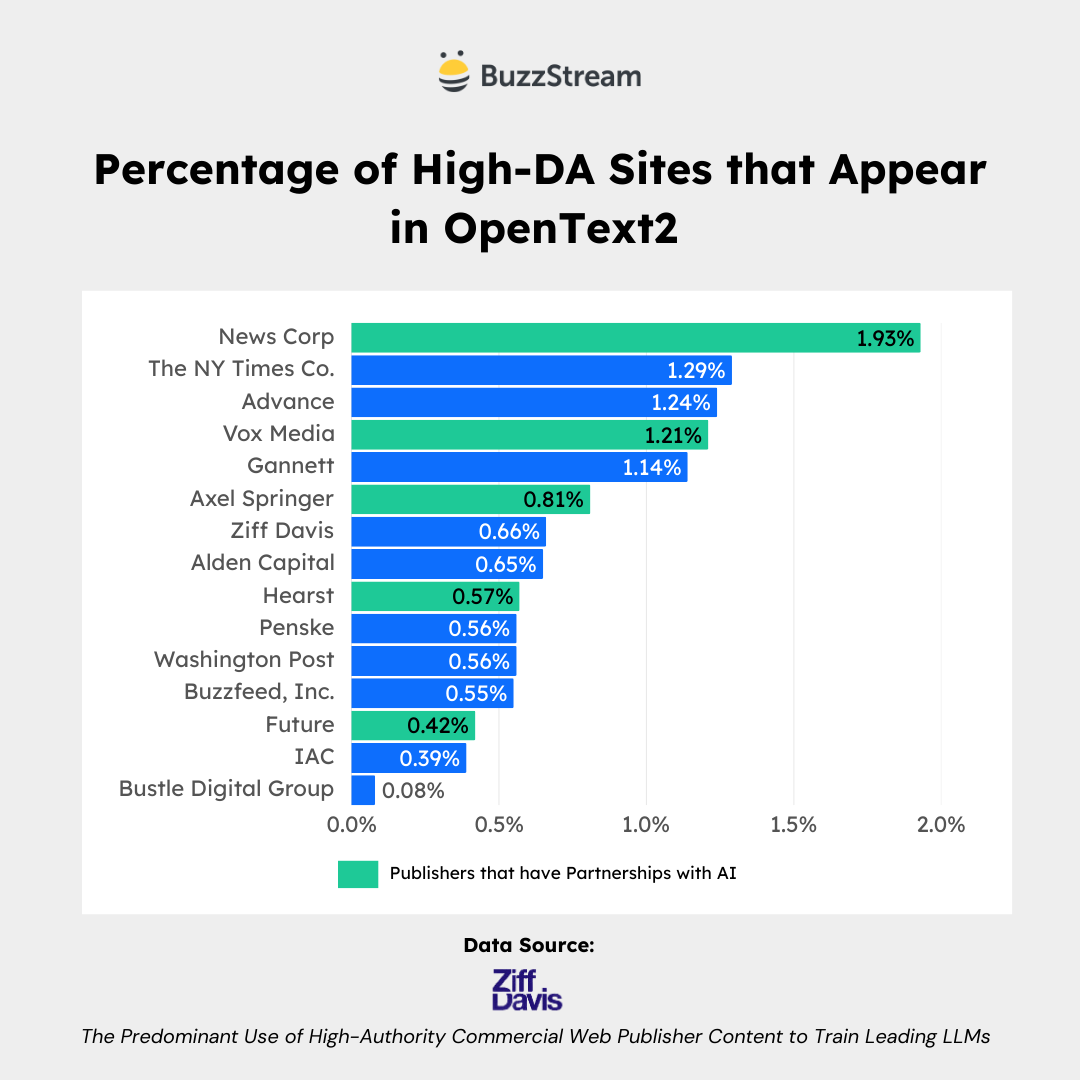

- OpenWebText2 (an expanded, open-source dataset modeled after WebText) also contains high-DA websites.

- AI companies have many partnerships with these high-DA publishers.

Getting featured in AI results, such as ChatGPT's answers, is still a mystery.

I have been hypothesizing that investing more in digital PR will increase the likelihood of getting your brand mentioned by an LLM (see link building in 2025), but I hadn't found a compelling study to back it up.

Until now.

A new study by researchers at Ziff Davis shows that LLMs may prioritize data from authoritative, high-DA (Domain Authority) publishers.

Taking it a step further, we may be able to tell which publishers these LLMs prefer based on the partnerships their developers have formed.

Here is what the landscape looks like today:

If the above is confusing, follow along with me as I help to connect the dots.

If you’re an LLM newbie like me, you might want to read the primer section that follows; if not, skip to the main findings section.

LLMs for Dummies (like me!)

Large Language Models are designed to generate, predict, and interpret your text. Some common LLMs you might have heard of are OpenAI’s GPT series, Google’s LaMDA, and Meta’s LLaMA.

They are trained on enormous datasets of text drawn from the internet, books, and other sources.

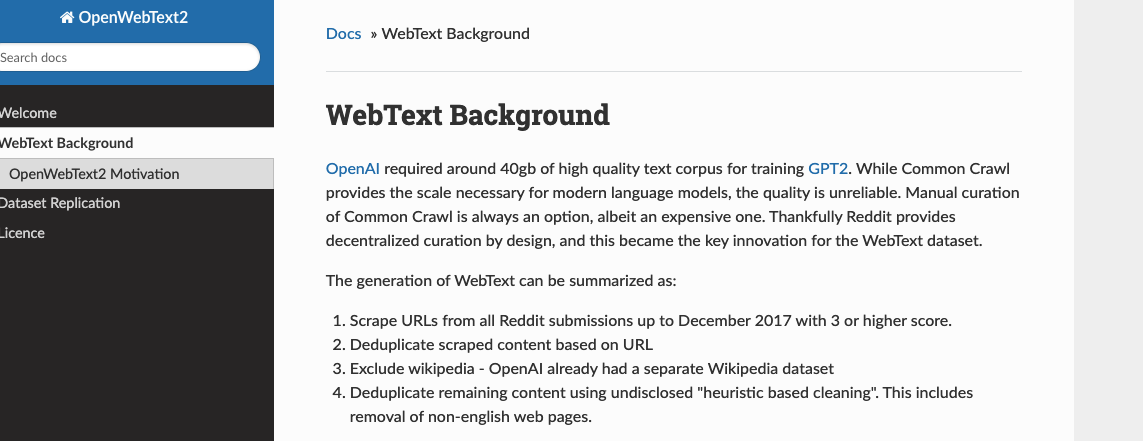

For instance, one of these datasets, OpenWebText2, was built by scraping URLs linked in Reddit posts with a score of three or higher.
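To make that selection rule concrete, here is a minimal Python sketch of a score-based URL filter. It assumes each Reddit submission is available as a dict with `url` and `score` fields; the records and the `select_urls` helper are hypothetical, and a real pipeline would go on to download and clean each linked page.

```python
# Hypothetical Reddit submission records; field names are assumptions.
submissions = [
    {"url": "https://news.yahoo.com/some-story.html", "score": 57},
    {"url": "https://example-blog.net/post", "score": 1},
    {"url": "https://www.wired.com/story/xyz", "score": 3},
    {"url": "https://news.yahoo.com/some-story.html", "score": 10},  # reposted link
]

MIN_SCORE = 3  # "a score of three or higher"


def select_urls(subs, min_score=MIN_SCORE):
    """Return outbound URLs from submissions scoring >= min_score,
    in first-seen order, with duplicate URLs dropped."""
    seen = set()
    kept = []
    for sub in subs:
        if sub["score"] < min_score:
            continue  # not enough community approval
        if sub["url"] in seen:
            continue  # this link was already collected
        seen.add(sub["url"])
        kept.append(sub["url"])
    return kept


print(select_urls(submissions))
```

The score works as a cheap, crowd-sourced quality signal: nobody reads the pages, but links that Reddit users upvoted are assumed to be worth keeping.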

So, a URL like this one from Yahoo would be included because of its high “score”:

As I said in the intro, LLMs don’t work like search engines, nor do they “know” things like you’re led to believe in the movies.

They are trained to predict text (specifically, the next word or word fragment) by learning patterns in these massive datasets.
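As a drastically simplified illustration of "learning patterns to predict text," the Python sketch below counts which word follows which in a tiny made-up corpus and then predicts the most common follower. Real LLMs use neural networks over tokens and much longer context, so treat this as an intuition aid, not as how GPT actually works.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for every word, which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # "cat" follows "the" most often
print(predict_next(model, "sat"))  # "on"
```

The takeaway for this post: the model can only reproduce patterns that appear in its training data, which is why *what* goes into the dataset matters so much.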

What Datasets Do LLMs Use?

We don’t know exactly what datasets LLMs use. However, there is some history to lean on, which we can use to infer their current methods.

For example, OpenAI openly disclosed the dataset they used to train GPT-2 (we are on GPT-4 now).

We can also look at AI companies’ partnerships with publishers for clues about where they get the data used to train their models.

Here are two other things to know:

Most Datasets Get Curated

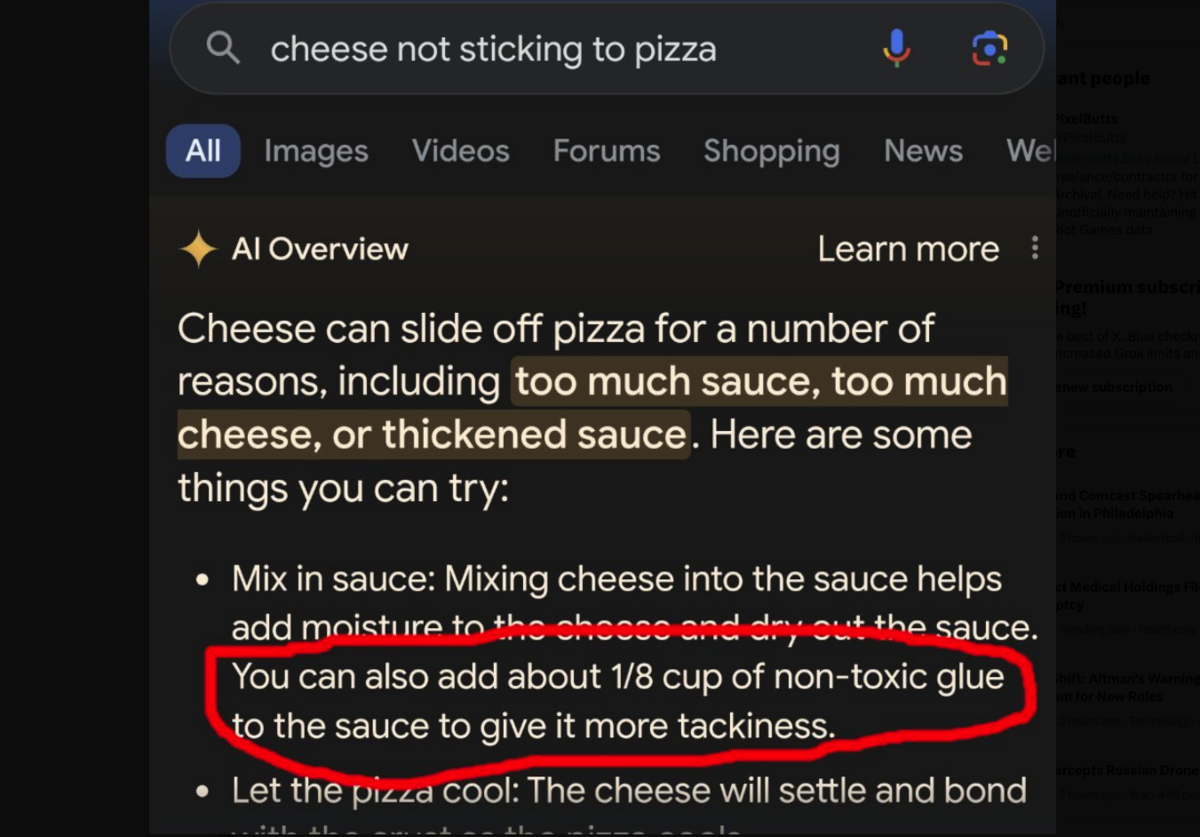

You saw what happened when Google’s initial launch of AI Overviews relied too heavily on Reddit:

Because some of the data gets pulled from places like Reddit, the datasets need curation to produce better, higher-quality results.

Raw datasets like Common Crawl have little to no curation.

Heavily curated datasets, such as OpenWebText or C4, filter out low-quality content and prioritize high-authority, relevant, and clean text.
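To show what curation can look like in practice, here is a minimal Python sketch of a few page-cleaning rules in the spirit of those Google described for C4 in its T5 paper: keep only lines that end in terminal punctuation, drop very short lines and "enable JavaScript" notices, and discard pages containing "lorem ipsum" or a curly brace (a sign of code). The `clean_page` function and its thresholds are illustrative assumptions, not the official pipeline, which also deduplicates text and applies language and bad-word filters.

```python
import re

TERMINAL = (".", "!", "?", '"')


def clean_page(text, min_words=5, min_sentences=3):
    """Apply a few C4-style heuristics to one web page.

    Returns the cleaned text, or None if the whole page is discarded.
    Thresholds are illustrative assumptions, not an official spec.
    """
    # Page-level rejects: placeholder text and likely code.
    if "lorem ipsum" in text.lower() or "{" in text:
        return None
    kept = []
    for line in text.splitlines():
        line = line.strip()
        if not line.endswith(TERMINAL):
            continue  # menus, headings, and buttons rarely end in punctuation
        if len(line.split()) < min_words:
            continue  # too short to be real prose
        if "javascript" in line.lower():
            continue  # "please enable JavaScript" boilerplate
        kept.append(line)
    cleaned = "\n".join(kept)
    # Rough sentence count: runs of terminal punctuation marks.
    if len(re.findall(r"[.!?]+", cleaned)) < min_sentences:
        return None
    return cleaned


page = """Home | About | Contact
Please enable JavaScript to view this site.
Large language models learn patterns from text.
They are trained on billions of words from the web.
Curation removes menus, ads, and other clutter.
Share"""
print(clean_page(page))
```

Rules like these are cheap to run over billions of pages, which is why raw crawls such as Common Crawl are usually passed through heuristics of this kind before training.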

The extent of curation varies widely among datasets.

Most LLMs Get Trained on a Mix of Datasets

To ensure quality and depth, LLMs are trained on a mix of datasets.
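One common way to mix datasets is to give each source a sampling weight and draw training examples accordingly. The Python sketch below uses weights roughly matching those reported in the GPT-3 paper; current models’ mixes are not public, and the `sample_sources` helper is hypothetical, so treat the numbers as illustrative.

```python
import random

# Illustrative mixture weights, roughly as reported for GPT-3.
MIXTURE = {
    "Common Crawl (filtered)": 0.60,
    "WebText2": 0.22,
    "Books1": 0.08,
    "Books2": 0.08,
    "Wikipedia": 0.03,
}


def sample_sources(mixture, n, seed=0):
    """Pick which dataset each of n training examples is drawn from."""
    rng = random.Random(seed)
    names = list(mixture)
    weights = list(mixture.values())
    # random.choices treats weights as relative, so they need not sum to 1.
    return rng.choices(names, weights=weights, k=n)


draws = sample_sources(MIXTURE, 10_000)
for name in MIXTURE:
    share = draws.count(name) / len(draws)
    print(f"{name:<25} {share:.1%}")
```

These weights were not proportional to dataset size: in the GPT-3 paper, the relatively small, Reddit-curated WebText2 was sampled roughly 2.9 times over during training, while the much larger filtered Common Crawl was not seen even once in full. Curated data, in other words, got outsized influence.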

As mentioned, we don’t know precisely what datasets each model uses, especially in a field evolving as fast as AI.

As the Ziff Davis study says, “Major LLM developers no longer disclose their training data as they once did.”

Here’s a snapshot of what we know:

| Dataset | Source | Curation Level | Accessibility | Usage Examples |
|---|---|---|---|---|
| WebText | Reddit links (≥3 upvotes) | High (human-curated) | Proprietary (OpenAI) | GPT-2, GPT-3 training indirectly |
| OpenWebText | Reddit links (replicated method) | Moderate | Open-source | Research, proxy for WebText |
| OpenWebText2 | Reddit links (longer timeframe) | Moderate | Open-source | Proxy for GPT-3’s WebText2 dataset |
| C4 | Cleaned Common Crawl data | Moderate | Open-source | Google T5, LaMDA |
| Common Crawl | General web crawl | Minimal | Open-source | Broad AI training, including GPT-3 |

OK, next, let’s look at what Ziff Davis showed us.

What Did The Ziff Davis Study Show Us?

Ziff Davis, the publishing company behind sites like IGN.com and Mashable.com (and Moz.com), cross-referenced a list of publishers with the URLs that appear in LLM training datasets.

The sets are:

- Common Crawl

- C4

- OpenWebText

- OpenWebText2

- WebText
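The write-up doesn’t spell out the exact matching code, so the following is only a minimal Python sketch of one way to cross-reference dataset URLs with a publisher list: normalize each URL’s host, then count it as a publisher URL if the host equals a listed domain or is a subdomain of one. The sample URLs and helper names are hypothetical; the three domains come from the Ziff Davis list in this post.

```python
from urllib.parse import urlparse


def registered_host(url):
    """Lowercase host without port or a leading 'www.' (a simplification)."""
    host = urlparse(url).netloc.lower().split(":")[0]
    return host[4:] if host.startswith("www.") else host


def publisher_share(dataset_urls, publisher_domains):
    """Fraction of dataset URLs whose host is, or is a subdomain of,
    a listed publisher domain."""
    domains = {d.lower() for d in publisher_domains}

    def matches(host):
        return any(host == d or host.endswith("." + d) for d in domains)

    if not dataset_urls:
        return 0.0
    hits = sum(1 for url in dataset_urls if matches(registered_host(url)))
    return hits / len(dataset_urls)


publishers = {"wired.com", "nbcnews.com", "techradar.com"}
sample = [
    "https://www.wired.com/story/ai-training-data/",
    "https://www.nbcnews.com/tech/some-article",
    "https://randomblog.example/post-1",
    "http://forums.example.org/thread/42",
]
print(publisher_share(sample, publishers))  # 0.5
```

Matching on `"." + domain` catches subdomains without also counting look-alikes such as `notwired.com`.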

Their findings are below:

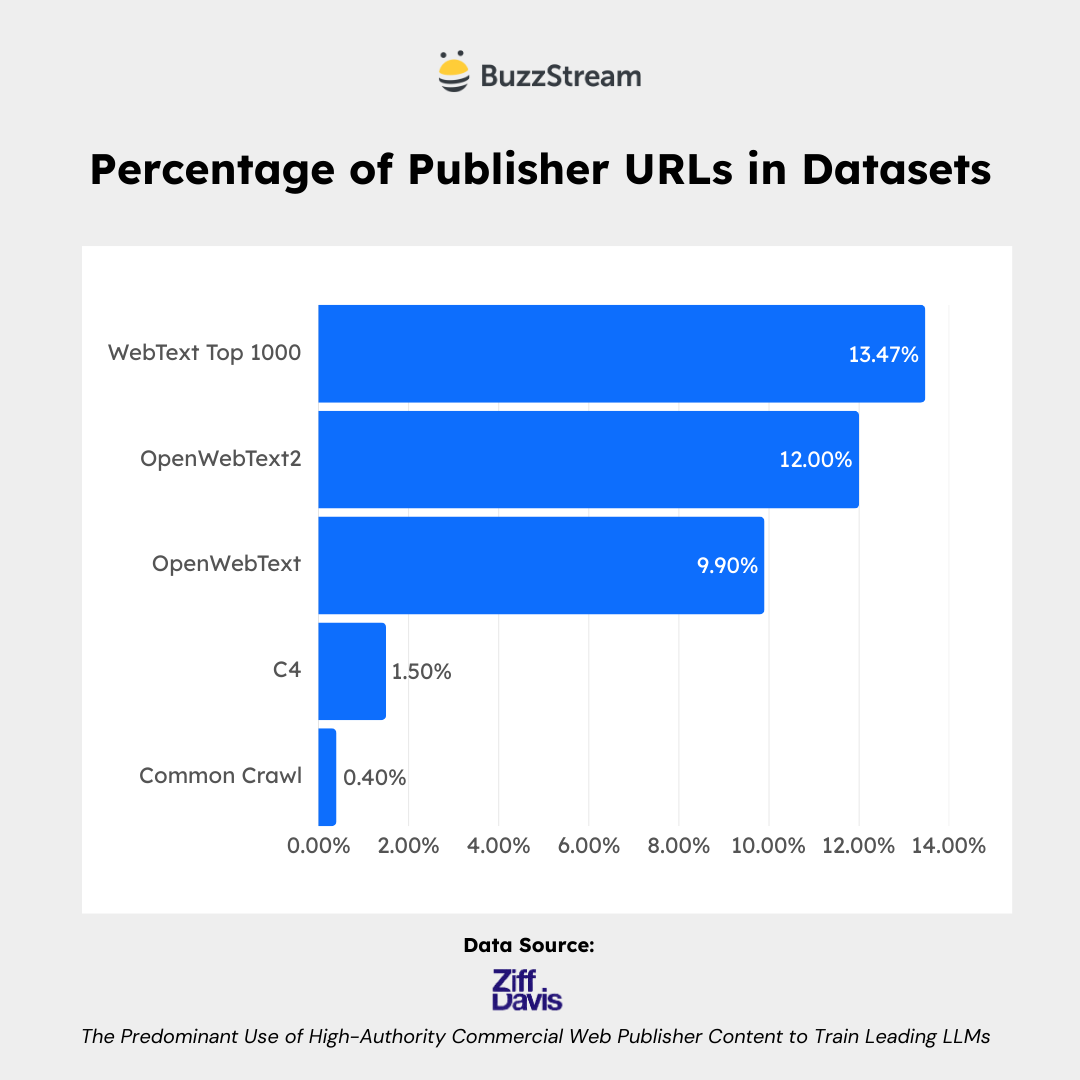

There is a High Percentage of Publisher URLs in Datasets

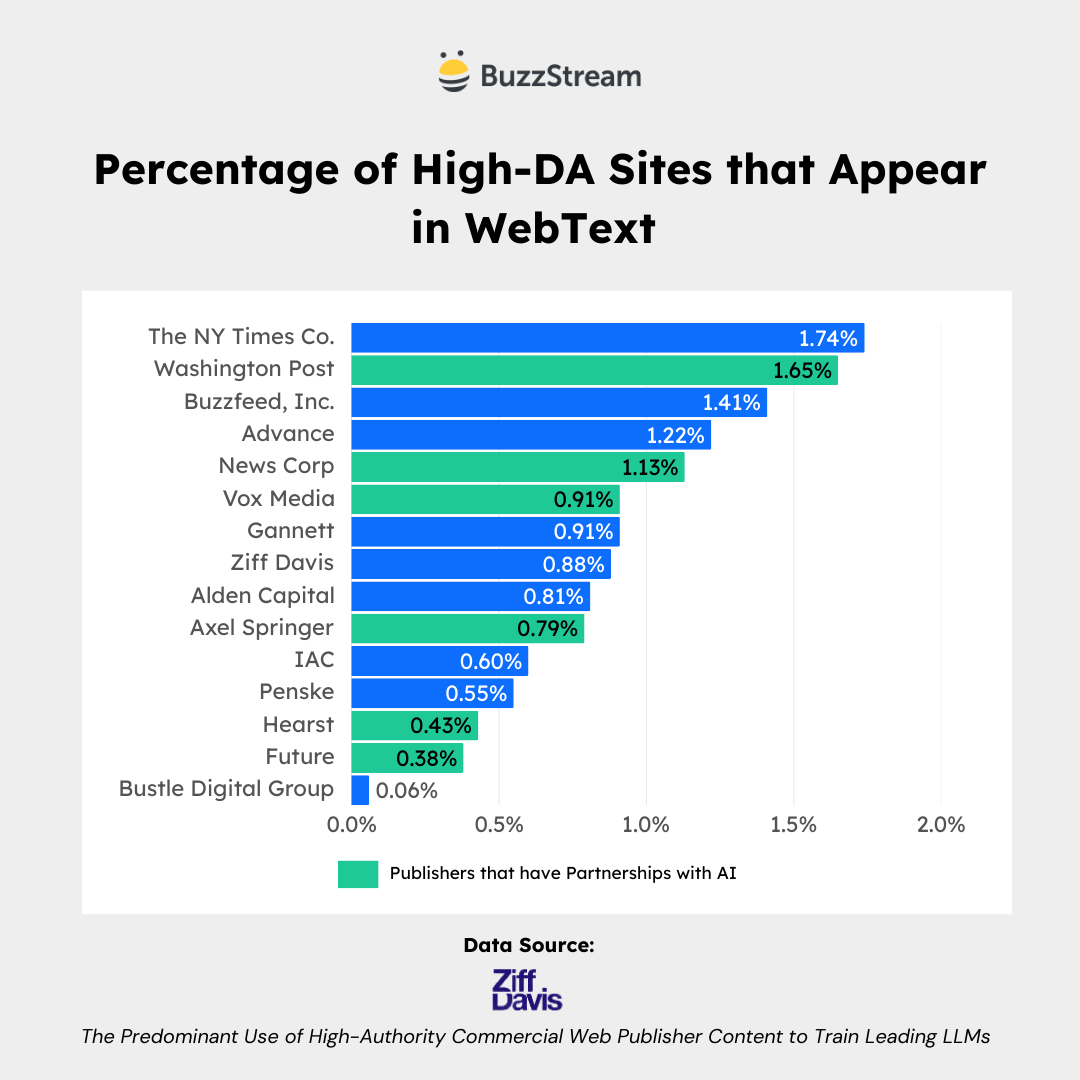

As you can see, there is a much higher concentration of publisher URLs in the highly curated WebText and OpenWebText2 datasets.

Then, they looked at the Domain Authority (DA) of these URLs to gauge how heavily high-DA sites are represented in each dataset:

There is a High Concentration of High DA URLs in Curated Datasets

The highly curated OpenWebText and OpenWebText2 datasets contain a higher share of high-DA URLs.
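Once publisher URLs are matched, gauging how "high-DA" a dataset is comes down to looking up each domain’s DA and tallying the results. Here is a minimal Python sketch that groups matched URLs into DA bands. The band edges, the helper names, and the two tiny "datasets" are hypothetical; only the DA values (wired.com 93, nj.com 89, gulflive.com 63, bz.de 20) come from the table below.

```python
from collections import Counter


def da_bucket(da):
    """Group a 0-100 Domain Authority score into coarse bands
    (band edges are an illustrative assumption)."""
    if da >= 80:
        return "80-100"
    if da >= 60:
        return "60-79"
    if da >= 40:
        return "40-59"
    return "0-39"


def da_distribution(url_domains, da_lookup):
    """Share of a dataset's matched URLs in each DA band.

    `url_domains` holds the matched domain for every URL (repeats count);
    `da_lookup` maps domain -> DA. Unknown domains are skipped.
    """
    bands = Counter(da_bucket(da_lookup[d]) for d in url_domains if d in da_lookup)
    total = sum(bands.values())
    if not total:
        return {}
    return {band: count / total for band, count in bands.items()}


da = {"wired.com": 93, "nj.com": 89, "gulflive.com": 63, "bz.de": 20}
curated = ["wired.com", "wired.com", "nj.com", "gulflive.com"]  # hypothetical
raw = ["bz.de", "bz.de", "gulflive.com", "wired.com"]  # hypothetical
print(da_distribution(curated, da))  # {'80-100': 0.75, '60-79': 0.25}
print(da_distribution(raw, da))
```

Comparing the two outputs is the kind of side-by-side view the study’s chart gives, just at toy scale.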

The list of URLs that Ziff Davis used, alongside their DA, can be found in the table below:

| URL | Publisher Network | Domain Authority |

|---|---|---|

| aenetworks.com | A+E Networks | 60 |

| aetv.com | A+E Networks | 70 |

| mylifetime.com | A+E Networks | 76 |

| history.com | A+E Networks | 92 |

| pennstudios.media | Advance (Condé Nast, Advance Local) | 12 |

| alabamaeducationlab.org | Advance (Condé Nast, Advance Local) | 22 |

| peopleofalabama.com | Advance (Condé Nast, Advance Local) | 22 |

| nyup.com | Advance (Condé Nast, Advance Local) | 32 |

| jerseysbest.com | Advance (Condé Nast, Advance Local) | 34 |

| worldofinteriors.com | Advance (Condé Nast, Advance Local) | 39 |

| wearepop.com | Advance (Condé Nast, Advance Local) | 40 |

| acbj.com | Advance (Condé Nast, Advance Local) | 41 |

| thisisalabama.org | Advance (Condé Nast, Advance Local) | 41 |

| hereisoregon.com | Advance (Condé Nast, Advance Local) | 42 |

| bizequity.com | Advance (Condé Nast, Advance Local) | 43 |

| lonestarlive.com | Advance (Condé Nast, Advance Local) | 46 |

| reckon.news | Advance (Condé Nast, Advance Local) | 50 |

| lacucinaitaliana.com | Advance (Condé Nast, Advance Local) | 54 |

| leadersinsport.com | Advance (Condé Nast, Advance Local) | 54 |

| johansens.com | Advance (Condé Nast, Advance Local) | 58 |

| gulflive.com | Advance (Condé Nast, Advance Local) | 63 |

| gourmet.com | Advance (Condé Nast, Advance Local) | 64 |

| houseandgarden.co.uk | Advance (Condé Nast, Advance Local) | 67 |

| ironman.com | Advance (Condé Nast, Advance Local) | 70 |

| newyorkupstate.com | Advance (Condé Nast, Advance Local) | 70 |

| cntraveller.com | Advance (Condé Nast, Advance Local) | 75 |

| hemmings.com | Advance (Condé Nast, Advance Local) | 75 |

| newzoo.com | Advance (Condé Nast, Advance Local) | 75 |

| voguebusiness.com | Advance (Condé Nast, Advance Local) | 75 |

| tatler.com | Advance (Condé Nast, Advance Local) | 76 |

| condenast.com | Advance (Condé Nast, Advance Local) | 77 |

| turnitin.com | Advance (Condé Nast, Advance Local) | 77 |

| them.us | Advance (Condé Nast, Advance Local) | 79 |

| silive.com | Advance (Condé Nast, Advance Local) | 80 |

| syracuse.com | Advance (Condé Nast, Advance Local) | 80 |

| glamourmagazine.co.uk | Advance (Condé Nast, Advance Local) | 81 |

| sportsbusinessjournal.com | Advance (Condé Nast, Advance Local) | 81 |

| lehighvalleylive.com | Advance (Condé Nast, Advance Local) | 82 |

| architecturaldigest.com | Advance (Condé Nast, Advance Local) | 86 |

| gq-magazine.co.uk | Advance (Condé Nast, Advance Local) | 86 |

| masslive.com | Advance (Condé Nast, Advance Local) | 86 |

| bonappetit.com | Advance (Condé Nast, Advance Local) | 87 |

| epicurious.com | Advance (Condé Nast, Advance Local) | 87 |

| vogue.co.uk | Advance (Condé Nast, Advance Local) | 87 |

| cleveland.com | Advance (Condé Nast, Advance Local) | 88 |

| cntraveler.com | Advance (Condé Nast, Advance Local) | 88 |

| oregonlive.com | Advance (Condé Nast, Advance Local) | 88 |

| pennlive.com | Advance (Condé Nast, Advance Local) | 88 |

| allure.com | Advance (Condé Nast, Advance Local) | 89 |

| mlive.com | Advance (Condé Nast, Advance Local) | 89 |

| nj.com | Advance (Condé Nast, Advance Local) | 89 |

| pitchfork.com | Advance (Condé Nast, Advance Local) | 89 |

| teenvogue.com | Advance (Condé Nast, Advance Local) | 89 |

| vogue.com | Advance (Condé Nast, Advance Local) | 89 |

| al.com | Advance (Condé Nast, Advance Local) | 90 |

| glamour.com | Advance (Condé Nast, Advance Local) | 90 |

| gq.com | Advance (Condé Nast, Advance Local) | 90 |

| self.com | Advance (Condé Nast, Advance Local) | 90 |

| bizjournals.com | Advance (Condé Nast, Advance Local) | 91 |

| arstechnica.com | Advance (Condé Nast, Advance Local) | 92 |

| vanityfair.com | Advance (Condé Nast, Advance Local) | 92 |

| newyorker.com | Advance (Condé Nast, Advance Local) | 93 |

| wired.com | Advance (Condé Nast, Advance Local) | 93 |

| coloradohometownweekly.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 43 |

| raisedintherockies.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 18 |

| virginiamedia.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 30 |

| mytowncolorado.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 31 |

| remindernews.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 32 |

| napervillemagazine.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 41 |

| nashobavalleyvoice.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 41 |

| bocopreps.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 44 |

| advocate-news.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 46 |

| altdaily.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 48 |

| lamarledger.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 49 |

| willitsnews.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 53 |

| fortmorgantimes.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 54 |

| broomfieldenterprise.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 55 |

| buffzone.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 55 |

| journal-advocate.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 55 |

| militarynews.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 56 |

| paradisepost.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 56 |

| record-bee.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 56 |

| ukiahdailyjournal.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 56 |

| canoncitydailyrecord.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 57 |

| redbluffdailynews.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 57 |

| standardspeaker.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 58 |

| republicanherald.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 59 |

| dailytribune.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 60 |

| dailydemocrat.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 61 |

| orovillemr.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 61 |

| pressenterprise.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 61 |

| themorningsun.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 61 |

| timesherald.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 61 |

| morningjournal.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 62 |

| pottsmerc.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 62 |

| dailylocal.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 63 |

| medianewsgroup.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 63 |

| metromix.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 63 |

| troyrecord.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 63 |

| citizensvoice.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 64 |

| greeleytribune.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 64 |

| reporterherald.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 64 |

| saratogian.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 64 |

| thereporteronline.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 65 |

| trentonian.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 65 |

| dailyfreeman.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 66 |

| macombdaily.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 66 |

| marinij.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 66 |

| times-standard.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 66 |

| chicoer.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 67 |

| news-herald.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 67 |

| thereporter.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 67 |

| timesheraldonline.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 67 |

| redlandsdailyfacts.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 68 |

| tribpub.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 68 |

| tribunecontentagency.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 68 |

| whittierdailynews.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 68 |

| sentinelandenterprise.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 69 |

| theoaklandpress.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 69 |

| thetimes-tribune.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 69 |

| timescall.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 70 |

| montereyherald.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 71 |

| delcotimes.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 72 |

| dailybulletin.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 74 |

| dailycamera.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 74 |

| lowellsun.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 74 |

| sgvtribune.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 74 |

| dailybreeze.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 75 |

| readingeagle.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 75 |

| pasadenastarnews.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 76 |

| chicagomag.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 78 |

| dailypress.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 78 |

| pilotonline.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 78 |

| santacruzsentinel.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 78 |

| eastbaytimes.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 79 |

| sbsun.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 79 |

| presstelegram.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 80 |

| dailynews.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 82 |

| mcall.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 83 |

| bostonherald.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 85 |

| courant.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 85 |

| sandiegouniontribune.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 85 |

| twincities.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 86 |

| denverpost.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 88 |

| orlandosentinel.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 88 |

| sun-sentinel.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 88 |

| ocregister.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 89 |

| mercurynews.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 91 |

| chicagotribune.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 92 |

| nydailynews.com | Alden Global Capital (Tribune Publishing, MediaNews Group) | 93 |

| bz.de | AxelSpringer | 20 |

| blau-magazin.de | AxelSpringer | 24 |

| shopalike.fi | AxelSpringer | 25 |

| shopalike.se | AxelSpringer | 25 |

| saongroup.com | AxelSpringer | 26 |

| shopalike.dk | AxelSpringer | 27 |

| axelspringer-syndication.de | AxelSpringer | 29 |

| schweizerbank.ch | AxelSpringer | 30 |

| shopalike.sk | AxelSpringer | 31 |

| playpc.pl | AxelSpringer | 33 |

| casamundo.com | AxelSpringer | 34 |

| belvilla.com | AxelSpringer | 35 |

| shopalike.nl | AxelSpringer | 38 |

| hy.co | AxelSpringer | 39 |

| pme.ch | AxelSpringer | 39 |

| axel-springer-akademie.de | AxelSpringer | 40 |

| bonial.com | AxelSpringer | 41 |

| insider-inc.com | AxelSpringer | 41 |

| housell.com | AxelSpringer | 42 |

| stepstone.at | AxelSpringer | 43 |

| students.ch | AxelSpringer | 43 |

| usgang.ch | AxelSpringer | 43 |

| wams.de | AxelSpringer | 44 |

| autobazar.eu | AxelSpringer | 45 |

| stepstone.nl | AxelSpringer | 45 |

| fitbook.de | AxelSpringer | 46 |

| nin.co.rs | AxelSpringer | 46 |

| homeday.de | AxelSpringer | 47 |

| mediaimpact.de | AxelSpringer | 47 |

| pnet.co.za | AxelSpringer | 47 |

| shopalike.es | AxelSpringer | 47 |

| stepstone.fr | AxelSpringer | 48 |

| autohaus24.de | AxelSpringer | 49 |

| ffn.de | AxelSpringer | 49 |

| finanzen.ch | AxelSpringer | 49 |

| geo.ru | AxelSpringer | 49 |

| careerjunction.co.za | AxelSpringer | 50 |

| ein-herz-fuer-kinder.de | AxelSpringer | 50 |

| stepstone.be | AxelSpringer | 50 |

| shopalike.fr | AxelSpringer | 51 |

| upday.com | AxelSpringer | 51 |

| candidatemanager.net | AxelSpringer | 52 |

| ikiosk.de | AxelSpringer | 52 |

| opineo.pl | AxelSpringer | 52 |

| jobs.ie | AxelSpringer | 53 |

| auto-bild.ro | AxelSpringer | 54 |

| bams.de | AxelSpringer | 54 |

| ladenzeile.de | AxelSpringer | 54 |

| profession.hu | AxelSpringer | 54 |

| techbook.de | AxelSpringer | 54 |

| topgear.es | AxelSpringer | 55 |

| bonial.fr | AxelSpringer | 56 |

| good.co | AxelSpringer | 56 |

| irishjobs.ie | AxelSpringer | 56 |

| onet.pl | AxelSpringer | 56 |

| carwale.com | AxelSpringer | 57 |

| jakdojade.pl | AxelSpringer | 57 |

| ofeminin.pl | AxelSpringer | 57 |

| radiohamburg.de | AxelSpringer | 57 |

| sportauto.fr | AxelSpringer | 57 |

| stepstone.com | AxelSpringer | 57 |

| auto-swiat.pl | AxelSpringer | 58 |

| beobachter.ch | AxelSpringer | 58 |

| idealo.co.uk | AxelSpringer | 58 |

| kaufda.de | AxelSpringer | 58 |

| noizz.pl | AxelSpringer | 58 |

| logic-immo.com | AxelSpringer | 59 |

| ffh.de | AxelSpringer | 60 |

| finanz.ru | AxelSpringer | 60 |

| medonet.pl | AxelSpringer | 60 |

| travelbook.de | AxelSpringer | 60 |

| azet.sk | AxelSpringer | 61 |

| metal-hammer.de | AxelSpringer | 61 |

| pclab.pl | AxelSpringer | 61 |

| periodismodelmotor.com | AxelSpringer | 61 |

| antenne.de | AxelSpringer | 62 |

| axelspringer.de | AxelSpringer | 62 |

| europeanvoice.com | AxelSpringer | 62 |

| immonet.de | AxelSpringer | 62 |

| meilleursagents.com | AxelSpringer | 62 |

| musikexpress.de | AxelSpringer | 62 |

| plejada.pl | AxelSpringer | 62 |

| vod.pl | AxelSpringer | 62 |

| zumi.pl | AxelSpringer | 62 |

| gofeminin.de | AxelSpringer | 63 |

| jobsite.co.uk | AxelSpringer | 64 |

| rollingstone.de | AxelSpringer | 64 |

| autojournal.fr | AxelSpringer | 65 |

| immowelt.de | AxelSpringer | 65 |

| stepstone.de | AxelSpringer | 65 |

| handelszeitung.ch | AxelSpringer | 66 |

| idealo.fr | AxelSpringer | 66 |

| lonny.com | AxelSpringer | 66 |

| n24.de | AxelSpringer | 66 |

| axelspringer.com | AxelSpringer | 67 |

| blikk.hu | AxelSpringer | 67 |

| przegladsportowy.pl | AxelSpringer | 67 |

| seloger.com | AxelSpringer | 69 |

| totaljobs.com | AxelSpringer | 69 |

| zumper.com | AxelSpringer | 69 |

| insiderintelligence.com | AxelSpringer | 70 |

| blic.rs | AxelSpringer | 71 |

| forbes.pl | AxelSpringer | 71 |

| newsweek.pl | AxelSpringer | 71 |

| finanzen.net | AxelSpringer | 72 |

| idealo.de | AxelSpringer | 72 |

| bz-berlin.de | AxelSpringer | 73 |

| stylebistro.com | AxelSpringer | 73 |

| autobild.de | AxelSpringer | 74 |

| gruenderszene.de | AxelSpringer | 74 |

| protocol.com | AxelSpringer | 74 |

| autoplus.fr | AxelSpringer | 75 |

| autoweek.nl | AxelSpringer | 75 |

| businessinsider.com.pl | AxelSpringer | 75 |

| fakt.pl | AxelSpringer | 75 |

| transfermarkt.de | AxelSpringer | 75 |

| komputerswiat.pl | AxelSpringer | 77 |

| skapiec.pl | AxelSpringer | 77 |

| auto.cz | AxelSpringer | 79 |

| autobild.es | AxelSpringer | 79 |

| businessinsider.nl | AxelSpringer | 80 |

| forbes.ru | AxelSpringer | 80 |

| computerbild.de | AxelSpringer | 81 |

| aufeminin.com | AxelSpringer | 82 |

| businessinsider.jp | AxelSpringer | 82 |

| ozy.com | AxelSpringer | 82 |

| awin.com | AxelSpringer | 83 |

| emarketer.com | AxelSpringer | 87 |

| businessinsider.com.au | AxelSpringer | 88 |

| autoexpress.co.uk | AxelSpringer | 89 |

| zimbio.com | AxelSpringer | 89 |

| businessinsider.de | AxelSpringer | 90 |

| businessinsider.in | AxelSpringer | 90 |

| shareasale.com | AxelSpringer | 90 |

| hobbyconsolas.com | AxelSpringer | 91 |

| politico.eu | AxelSpringer | 91 |

| bild.de | AxelSpringer | 92 |

| businessinsider.es | AxelSpringer | 92 |

| politico.com | AxelSpringer | 92 |

| welt.de | AxelSpringer | 92 |

| computerhoy.com | AxelSpringer | 93 |

| insider.com | AxelSpringer | 93 |

| businessinsider.com | AxelSpringer | 94 |

| bdg.com | Bustle Digital Group | 46 |

| romper.com | Bustle Digital Group | 75 |

| thezoereport.com | Bustle Digital Group | 76 |

| nylon.com | Bustle Digital Group | 77 |

| scarymommy.com | Bustle Digital Group | 78 |

| fatherly.com | Bustle Digital Group | 81 |

| wmagazine.com | Bustle Digital Group | 84 |

| inverse.com | Bustle Digital Group | 85 |

| mic.com | Bustle Digital Group | 85 |

| elitedaily.com | Bustle Digital Group | 89 |

| bustle.com | Bustle Digital Group | 92 |

| tasty.co | Buzzfeed, Inc. | 74 |

| firstwefeast.com | Buzzfeed, Inc. | 77 |

| buzzfeednews.com | Buzzfeed, Inc. | 86 |

| huffingtonpost.co.uk | Buzzfeed, Inc. | 91 |

| buzzfeed.com | Buzzfeed, Inc. | 93 |

| huffingtonpost.com | Buzzfeed, Inc. | 94 |

| huffpost.com | Buzzfeed, Inc. | 94 |

| cnbc.com | Comcast NBCUniversal | 93 |

| telemundoareadelabahia.com | Comcast NBCUniversal | 54 |

| telemundonuevainglaterra.com | Comcast NBCUniversal | 46 |

| universalproductsexperiences.com | Comcast NBCUniversal | 44 |

| universalstudioshollywood.com | Comcast NBCUniversal | 68 |

| nbcuproductions.com | Comcast NBCUniversal | 12 |

| nbcsportsnext.com | Comcast NBCUniversal | 37 |

| illumination.com | Comcast NBCUniversal | 39 |

| telemundofresno.com | Comcast NBCUniversal | 42 |

| universalbeijingresort.com | Comcast NBCUniversal | 43 |

| telemundo48elpaso.com | Comcast NBCUniversal | 44 |

| telemundoutah.com | Comcast NBCUniversal | 45 |

| telemundo33.com | Comcast NBCUniversal | 46 |

| telemundo49.com | Comcast NBCUniversal | 46 |

| telemundosanantonio.com | Comcast NBCUniversal | 46 |

| telemundo31.com | Comcast NBCUniversal | 48 |

| telemundo40.com | Comcast NBCUniversal | 50 |

| telemundo62.com | Comcast NBCUniversal | 50 |

| telemundoarizona.com | Comcast NBCUniversal | 52 |

| telemundodenver.com | Comcast NBCUniversal | 53 |

| telemundolasvegas.com | Comcast NBCUniversal | 53 |

| telemundowashingtondc.com | Comcast NBCUniversal | 54 |

| universalpictures.co.uk | Comcast NBCUniversal | 56 |

| hayu.com | Comcast NBCUniversal | 57 |

| telemundo20.com | Comcast NBCUniversal | 57 |

| telemundodallas.com | Comcast NBCUniversal | 57 |

| telemundohouston.com | Comcast NBCUniversal | 58 |

| golfnow.com | Comcast NBCUniversal | 59 |

| sportsengine.com | Comcast NBCUniversal | 59 |

| telemundochicago.com | Comcast NBCUniversal | 59 |

| universalkids.com | Comcast NBCUniversal | 61 |

| telemundo51.com | Comcast NBCUniversal | 63 |

| rwsentosa.com | Comcast NBCUniversal | 65 |

| telemundo52.com | Comcast NBCUniversal | 67 |

| telemundo47.com | Comcast NBCUniversal | 68 |

| focusfeatures.com | Comcast NBCUniversal | 73 |

| sny.tv | Comcast NBCUniversal | 74 |

| usj.co.jp | Comcast NBCUniversal | 74 |

| dreamworks.com | Comcast NBCUniversal | 75 |

| telemundopr.com | Comcast NBCUniversal | 75 |

| peacocktv.com | Comcast NBCUniversal | 77 |

| nbcboston.com | Comcast NBCUniversal | 78 |

| nbcsportsphiladelphia.com | Comcast NBCUniversal | 79 |

| nbcmiami.com | Comcast NBCUniversal | 80 |

| nbcsportsbayarea.com | Comcast NBCUniversal | 80 |

| oxygen.com | Comcast NBCUniversal | 80 |

| universalorlando.com | Comcast NBCUniversal | 80 |

| universalpictures.com | Comcast NBCUniversal | 80 |

| nbcconnecticut.com | Comcast NBCUniversal | 81 |

| nbcolympics.com | Comcast NBCUniversal | 81 |

| usanetwork.com | Comcast NBCUniversal | 81 |

| nbcbayarea.com | Comcast NBCUniversal | 82 |

| nbcsandiego.com | Comcast NBCUniversal | 82 |

| telemundo.com | Comcast NBCUniversal | 83 |

| nbcchicago.com | Comcast NBCUniversal | 84 |

| nbcdfw.com | Comcast NBCUniversal | 84 |

| nbclosangeles.com | Comcast NBCUniversal | 84 |

| nbcphiladelphia.com | Comcast NBCUniversal | 84 |

| nbcwashington.com | Comcast NBCUniversal | 84 |

| uphe.com | Comcast NBCUniversal | 84 |

| bravotv.com | Comcast NBCUniversal | 85 |

| nbcnewyork.com | Comcast NBCUniversal | 88 |

| syfy.com | Comcast NBCUniversal | 88 |

| fandango.com | Comcast NBCUniversal | 89 |

| msnbc.com | Comcast NBCUniversal | 89 |

| nbc.com | Comcast NBCUniversal | 90 |

| nbcsports.com | Comcast NBCUniversal | 90 |

| eonline.com | Comcast NBCUniversal | 92 |

| rottentomatoes.com | Comcast NBCUniversal | 92 |

| cnbc.com | Comcast NBCUniversal | 93 |

| nbcnews.com | Comcast NBCUniversal | 93 |

| sciencenature.theweekjunior.co.uk | Future plc | 41 |

| technologyleadershipsummit.com | Future plc | 24 |

| goldenjoystickawards.com | Future plc | 3 |

| livingetcevents.com | Future plc | 9 |

| musicweektechsummit.com | Future plc | 12 |

| avnetworknation.com | Future plc | 14 |

| techradarchoiceawards.com | Future plc | 16 |

| mw-womeninmusic.com | Future plc | 19 |

| mcnwonderwomen.com | Future plc | 21 |

| falltvevents.com | Future plc | 22 |

| moderndad.com | Future plc | 22 |

| subscribe.techmags.com.au | Future plc | 24 |

| brandimpactawards.com | Future plc | 26 |

| mixsoundforfilm.com | Future plc | 26 |

| womansweekly.co.uk | Future plc | 29 |

| vertexconf.com | Future plc | 31 |

| pricepanda.com | Future plc | 32 |

| bchalloffame.com | Future plc | 33 |

| mrmobile.tech | Future plc | 33 |

| mobileindustryawards.com | Future plc | 38 |

| 5gradar.com | Future plc | 40 |

| bikeperfect.com | Future plc | 40 |

| plotfinder.net | Future plc | 40 |

| theweekjunior.co.uk | Future plc | 41 |

| practicalmotorhome.com | Future plc | 42 |

| futureevents.uk | Future plc | 43 |

| svconline.com | Future plc | 45 |

| thrifter.com | Future plc | 45 |

| total911.com | Future plc | 45 |

| actualtechmedia.com | Future plc | 46 |

| petsradar.com | Future plc | 46 |

| practicalcaravan.com | Future plc | 46 |

| fitandwell.com | Future plc | 47 |

| pbo.co.uk | Future plc | 47 |

| residentialsystems.com | Future plc | 47 |

| thefield.co.uk | Future plc | 47 |

| channelpro.co.uk | Future plc | 49 |

| homebuildingshow.co.uk | Future plc | 49 |

| installation-international.com | Future plc | 49 |

| mby.com | Future plc | 49 |

| yachtingmonthly.com | Future plc | 49 |

| mbr.co.uk | Future plc | 52 |

| myimperfectlife.com | Future plc | 52 |

| photographyshow.com | Future plc | 52 |

| gardeningetc.com | Future plc | 53 |

| avnetwork.com | Future plc | 54 |

| creativeplanetnetwork.com | Future plc | 54 |

| getcomputeractive.co.uk | Future plc | 54 |

| mobilechoiceuk.com | Future plc | 54 |

| mozo.com.au | Future plc | 54 |

| magazine.co.uk | Future plc | 55 |

| womanmagazine.co.uk | Future plc | 55 |

| advnture.com | Future plc | 56 |

| prosoundnetwork.com | Future plc | 56 |

| yachtingworld.com | Future plc | 56 |

| ybw.com | Future plc | 56 |

| cloudpro.co.uk | Future plc | 58 |

| pcgamingshow.com | Future plc | 58 |

| rugbyworld.com | Future plc | 58 |

| getprice.com.au | Future plc | 59 |

| radioworld.com | Future plc | 59 |

| tvbeurope.com | Future plc | 59 |

| livingetc.com | Future plc | 60 |

| golf-monthly.co.uk | Future plc | 61 |

| techlearning.com | Future plc | 61 |

| apcmag.com | Future plc | 62 |

| coachmag.co.uk | Future plc | 62 |

| mixonline.com | Future plc | 62 |

| future.swoogo.com | Future plc | 63 |

| homesandgardens.com | Future plc | 63 |

| myvouchercodes.co.uk | Future plc | 63 |

| guitarplayer.com | Future plc | 64 |

| countrylife.co.uk | Future plc | 65 |

| golfmonthly.com | Future plc | 65 |

| horseandhound.co.uk | Future plc | 66 |

| idealhome.co.uk | Future plc | 66 |

| moneyweek.com | Future plc | 66 |

| goodto.com | Future plc | 67 |

| myfavouritemagazines.co.uk | Future plc | 67 |

| womanandhome.com | Future plc | 67 |

| realhomes.com | Future plc | 68 |

| gocompare.com | Future plc | 69 |

| homebuilding.co.uk | Future plc | 69 |

| multichannel.com | Future plc | 72 |

| musicweek.com | Future plc | 72 |

| subscribe.pcpro.co.uk | Future plc | 73 |

| broadcastingcable.com | Future plc | 74 |

| twice.com | Future plc | 74 |

| tvtechnology.com | Future plc | 75 |

| howitworksdaily.com | Future plc | 76 |

| kiplinger.com | Future plc | 76 |

| magazinesdirect.com | Future plc | 77 |

| cyclingweekly.com | Future plc | 78 |

| musicradar.com | Future plc | 78 |

| nexttv.com | Future plc | 78 |

| decanter.com | Future plc | 79 |

| smartbrief.com | Future plc | 79 |

| whathifi.com | Future plc | 79 |

| guitarworld.com | Future plc | 80 |

| whattowatch.com | Future plc | 80 |

| digitalcameraworld.com | Future plc | 81 |

| shortlist.com | Future plc | 81 |

| gardeningknowhow.com | Future plc | 82 |

| theweek.co.uk | Future plc | 82 |

| cyclingnews.com | Future plc | 83 |

| itproportal.com | Future plc | 83 |

| technobuffalo.com | Future plc | 83 |

| toptenreviews.com | Future plc | 83 |

| wallpaper.com | Future plc | 83 |

| fourfourtwo.com | Future plc | 84 |

| whowhatwear.com | Future plc | 84 |

| laptopmag.com | Future plc | 85 |

| loudersound.com | Future plc | 85 |

| creativebloq.com | Future plc | 86 |

| itpro.co.uk | Future plc | 86 |

| marieclaire.co.uk | Future plc | 86 |

| t3.com | Future plc | 86 |

| whatculture.com | Future plc | 86 |

| cinemablend.com | Future plc | 87 |

| anandtech.com | Future plc | 89 |

| imore.com | Future plc | 89 |

| tomsguide.com | Future plc | 89 |

| gamesradar.com | Future plc | 90 |

| pcgamer.com | Future plc | 90 |

| androidcentral.com | Future plc | 91 |

| livescience.com | Future plc | 91 |

| space.com | Future plc | 91 |

| techradar.com | Future plc | 91 |

| tomshardware.com | Future plc | 91 |

| windowscentral.com | Future plc | 91 |

| becclesandbungayjournal.co.uk | Gannett | 41 |
| bordercountiesadvertizer.co.uk | Gannett | 46 |
| braintreeandwithamtimes.co.uk | Gannett | 55 |
| buckscountycouriertimes.com | Gannett | 69 |
| burnhamandhighbridgeweeklynews.co.uk | Gannett | 47 |
| clactonandfrintongazette.co.uk | Gannett | 54 |
| darlingtonandstocktontimes.co.uk | Gannett | 54 |
| harwichandmanningtreestandard.co.uk | Gannett | 46 |
| maldonandburnhamstandard.co.uk | Gannett | 51 |
| prestwichandwhitefieldguide.co.uk | Gannett | 40 |
| richmondandtwickenhamtimes.co.uk | Gannett | 59 |
| runcornandwidnesworld.co.uk | Gannett | 51 |
| swanageandwarehamvoice.co.uk | Gannett | 32 |
| thelancasterandmorecambecitizen.co.uk | Gannett | 1 |
| thetfordandbrandontimes.co.uk | Gannett | 38 |
| thetottenhamindependent.co.uk | Gannett | 50 |
| wattonandswaffhamtimes.co.uk | Gannett | 39 |
| wymondhamandattleboroughmercury.co.uk | Gannett | 38 |
| prime-magazine.co.uk | Gannett | 8 |
| eastkilbrideconnect.co.uk | Gannett | 14 |
| essex-media.co.uk | Gannett | 16 |
| businessiqnortheast.co.uk | Gannett | 17 |
| newforestpost.co.uk | Gannett | 17 |
| autoexchange.co.uk | Gannett | 18 |
| cincinatti.com | Gannett | 20 |
| troontimes.com | Gannett | 20 |
| wearevoice.co.uk | Gannett | 24 |
| dorsetbeaches.co.uk | Gannett | 31 |
| essexbusinessawards.co.uk | Gannett | 31 |
| northernfarmer.co.uk | Gannett | 32 |
| carrickherald.com | Gannett | 34 |
| living-magazines.co.uk | Gannett | 34 |
| peterboroughmatters.co.uk | Gannett | 35 |
| basildonstandard.co.uk | Gannett | 36 |
| fakenhamtimes.co.uk | Gannett | 37 |
| redhillandreigatelife.co.uk | Gannett | 37 |
| walesfarmer.co.uk | Gannett | 37 |
| forestryjournal.co.uk | Gannett | 39 |
| campaignseries.co.uk | Gannett | 40 |
| derehamtimes.co.uk | Gannett | 40 |
| localberkshire.co.uk | Gannett | 40 |
| wiltshirebusinessonline.co.uk | Gannett | 40 |
| cumnockchronicle.com | Gannett | 41 |
| dissmercury.co.uk | Gannett | 41 |
| droitwichadvertiser.co.uk | Gannett | 41 |
| yeovilexpress.co.uk | Gannett | 41 |
| ayradvertiser.com | Gannett | 42 |
| chorleycitizen.co.uk | Gannett | 42 |
| largsandmillportnews.com | Gannett | 42 |
| tewkesburyadmag.co.uk | Gannett | 42 |
| whitchurchherald.co.uk | Gannett | 42 |
| alloaadvertiser.com | Gannett | 43 |
| brentwoodlive.co.uk | Gannett | 43 |
| centralfifetimes.com | Gannett | 43 |
| midweekherald.co.uk | Gannett | 43 |
| romseyadvertiser.co.uk | Gannett | 43 |
| saffronwaldenreporter.co.uk | Gannett | 43 |
| dunmowbroadcast.co.uk | Gannett | 44 |
| hillingdontimes.co.uk | Gannett | 44 |
| ludlowadvertiser.co.uk | Gannett | 44 |
| thegardnernews.com | Gannett | 44 |
| ardrossanherald.com | Gannett | 45 |
| banburycake.co.uk | Gannett | 45 |
| dumbartonreporter.co.uk | Gannett | 45 |
| makeitgrateful.com | Gannett | 45 |
| milfordmercury.co.uk | Gannett | 45 |
| peeblesshirenews.com | Gannett | 45 |
| royston-crow.co.uk | Gannett | 45 |
| thisisoxfordshire.co.uk | Gannett | 45 |
| chelmsfordweeklynews.co.uk | Gannett | 46 |
| helensburghadvertiser.co.uk | Gannett | 46 |
| in-cumbria.com | Gannett | 46 |
| ledburyreporter.co.uk | Gannett | 46 |
| lowestoftjournal.co.uk | Gannett | 46 |
| theoldhamtimes.co.uk | Gannett | 46 |
| winsfordguardian.co.uk | Gannett | 46 |
| bicesteradvertiser.net | Gannett | 47 |
| chardandilminsternews.co.uk | Gannett | 47 |
| eppingforestguardian.co.uk | Gannett | 47 |
| freepressseries.co.uk | Gannett | 47 |
| southendstandard.co.uk | Gannett | 47 |
| southwestfarmer.co.uk | Gannett | 47 |
| windsorobserver.co.uk | Gannett | 47 |
| bordertelegraph.com | Gannett | 48 |
| bromsgroveadvertiser.co.uk | Gannett | 48 |
| cotswoldjournal.co.uk | Gannett | 48 |
| ealingtimes.co.uk | Gannett | 48 |
| halsteadgazette.co.uk | Gannett | 48 |
| northsomersettimes.co.uk | Gannett | 48 |
| northwalespioneer.co.uk | Gannett | 48 |
| wharfedaleobserver.co.uk | Gannett | 48 |
| whitehavennews.co.uk | Gannett | 48 |
| barrheadnews.com | Gannett | 49 |
| borehamwoodtimes.co.uk | Gannett | 49 |
| clydebankpost.co.uk | Gannett | 49 |
| denbighshirefreepress.co.uk | Gannett | 49 |
| hexham-courant.co.uk | Gannett | 49 |
| irvinetimes.com | Gannett | 49 |
| northwaleschronicle.co.uk | Gannett | 49 |
| penarthtimes.co.uk | Gannett | 49 |
| rhyljournal.co.uk | Gannett | 49 |
| sidmouthherald.co.uk | Gannett | 49 |
| starcourier.com | Gannett | 49 |
| thurrockgazette.co.uk | Gannett | 49 |
| bridportnews.co.uk | Gannett | 50 |
| gazetteherald.co.uk | Gannett | 50 |
| heraldseries.co.uk | Gannett | 50 |
| mcdonoughvoice.com | Gannett | 50 |
| northnorfolknews.co.uk | Gannett | 50 |
| pontiacdailyleader.com | Gannett | 50 |
| stalbansreview.co.uk | Gannett | 50 |
| tivysideadvertiser.co.uk | Gannett | 50 |
| witneygazette.co.uk | Gannett | 50 |
| elystandard.co.uk | Gannett | 51 |
| eveshamjournal.co.uk | Gannett | 51 |
| malverngazette.co.uk | Gannett | 51 |
| redditchadvertiser.co.uk | Gannett | 51 |
| southwalesguardian.co.uk | Gannett | 51 |
| thegleaner.com | Gannett | 51 |
| thisisthewestcountry.co.uk | Gannett | 51 |
| andoveradvertiser.co.uk | Gannett | 52 |
| bridgwatermercury.co.uk | Gannett | 52 |
| cantondailyledger.com | Gannett | 52 |
| cheboygannews.com | Gannett | 52 |
| daily-jeff.com | Gannett | 52 |
| eastlothiancourier.com | Gannett | 52 |
| exmouthjournal.co.uk | Gannett | 52 |
| halesowennews.co.uk | Gannett | 52 |
| ilkleygazette.co.uk | Gannett | 52 |
| messengernewspapers.co.uk | Gannett | 52 |
| reporter-times.com | Gannett | 52 |
| thescottishfarmer.co.uk | Gannett | 52 |
| wisbechstandard.co.uk | Gannett | 52 |
| barryanddistrictnews.co.uk | Gannett | 53 |
| cambstimes.co.uk | Gannett | 53 |
| countytimes.co.uk | Gannett | 53 |
| dudleynews.co.uk | Gannett | 53 |
| gazetteseries.co.uk | Gannett | 53 |
| hillsdale.net | Gannett | 53 |
| huntspost.co.uk | Gannett | 53 |
| impartialreporter.com | Gannett | 53 |
| knutsfordguardian.co.uk | Gannett | 53 |
| sloughobserver.co.uk | Gannett | 53 |
| sooeveningnews.com | Gannett | 53 |
| southernkitchen.com | Gannett | 53 |
| sturgisjournal.com | Gannett | 53 |
| timestelegram.com | Gannett | 53 |
| bracknellnews.co.uk | Gannett | 54 |
| ellwoodcityledger.com | Gannett | 54 |
| greatyarmouthmercury.co.uk | Gannett | 54 |
| harrowtimes.co.uk | Gannett | 54 |
| keighleynews.co.uk | Gannett | 54 |
| leighjournal.co.uk | Gannett | 54 |
| lincolncourier.com | Gannett | 54 |
| pekintimes.com | Gannett | 54 |
| thedailyreporter.com | Gannett | 54 |
| timesandstar.co.uk | Gannett | 54 |
| tmnews.com | Gannett | 54 |
| wandsworthguardian.co.uk | Gannett | 54 |
| asianimage.co.uk | Gannett | 55 |
| bucyrustelegraphforum.com | Gannett | 55 |
| chesterstandard.co.uk | Gannett | 55 |
| cravenherald.co.uk | Gannett | 55 |
| enfieldindependent.co.uk | Gannett | 55 |
| greenocktelegraph.co.uk | Gannett | 55 |
| kidderminstershuttle.co.uk | Gannett | 55 |
| stourbridgenews.co.uk | Gannett | 55 |
| the-review.com | Gannett | 55 |
| thisislancashire.co.uk | Gannett | 55 |
| times-gazette.com | Gannett | 55 |
| wiltsglosstandard.co.uk | Gannett | 55 |
| dailyworld.com | Gannett | 56 |
| dunfermlinepress.com | Gannett | 56 |
| kilburntimes.co.uk | Gannett | 56 |
| marshfieldnewsherald.com | Gannett | 56 |
| portclintonnewsherald.com | Gannett | 56 |
| record-courier.com | Gannett | 56 |
| wimbledonguardian.co.uk | Gannett | 56 |
| basingstokegazette.co.uk | Gannett | 57 |
| coshoctontribune.com | Gannett | 57 |
| dailyamerican.com | Gannett | 57 |
| eveningtribune.com | Gannett | 57 |
| falmouthpacket.co.uk | Gannett | 57 |
| hampshirechronicle.co.uk | Gannett | 57 |
| hertsad.co.uk | Gannett | 57 |
| journalstandard.com | Gannett | 57 |
| northwichguardian.co.uk | Gannett | 57 |
| oakridger.com | Gannett | 57 |
| pinkun.com | Gannett | 57 |
| stevenspointjournal.com | Gannett | 57 |
| sthelensstar.co.uk | Gannett | 57 |
| stroudnewsandjournal.co.uk | Gannett | 57 |
| the-leader.com | Gannett | 57 |
| thepublicopinion.com | Gannett | 57 |
| therecordherald.com | Gannett | 57 |
| whtimes.co.uk | Gannett | 57 |
| burytimes.co.uk | Gannett | 58 |
| hawkcentral.com | Gannett | 58 |
| lenconnect.com | Gannett | 58 |
| the-daily-record.com | Gannett | 58 |
| thecomet.net | Gannett | 58 |
| thenews-messenger.com | Gannett | 58 |
| thisiswiltshire.co.uk | Gannett | 58 |
| tricountyindependent.com | Gannett | 58 |
| westerntelegraph.co.uk | Gannett | 58 |
| wiltshiretimes.co.uk | Gannett | 58 |
| examiner-enterprise.com | Gannett | 59 |
| galesburg.com | Gannett | 59 |
| gazetteandherald.co.uk | Gannett | 59 |
| herefordtimes.com | Gannett | 59 |
| indeonline.com | Gannett | 59 |
| lancastereaglegazette.com | Gannett | 59 |
| leaderlive.co.uk | Gannett | 59 |
| newhamrecorder.co.uk | Gannett | 59 |
| newportri.com | Gannett | 59 |
| pal-item.com | Gannett | 59 |
| readingchronicle.co.uk | Gannett | 59 |
| salisburyjournal.co.uk | Gannett | 59 |
| times-series.co.uk | Gannett | 59 |
| wirralglobe.co.uk | Gannett | 59 |
| wisconsinrapidstribune.com | Gannett | 59 |
| bucksfreepress.co.uk | Gannett | 60 |
| dailycomet.com | Gannett | 60 |
| eastlondonadvertiser.co.uk | Gannett | 60 |
| eveningsun.com | Gannett | 60 |
| hackneygazette.co.uk | Gannett | 60 |
| salina.com | Gannett | 60 |
| somersetcountygazette.co.uk | Gannett | 60 |
| stargazette.com | Gannett | 60 |
| thewestonmercury.co.uk | Gannett | 60 |
| timesreporter.com | Gannett | 60 |
| barkinganddagenhampost.co.uk | Gannett | 61 |
| blueridgenow.com | Gannett | 61 |
| htrnews.com | Gannett | 61 |
| ilfordrecorder.co.uk | Gannett | 61 |
| livingstondaily.com | Gannett | 61 |
| mpnnow.com | Gannett | 61 |
| newarkadvocate.com | Gannett | 61 |
| newschief.com | Gannett | 61 |
| progress-index.com | Gannett | 61 |
| publicopiniononline.com | Gannett | 61 |
| sheboyganpress.com | Gannett | 61 |
| shelbystar.com | Gannett | 61 |
| surreycomet.co.uk | Gannett | 61 |
| thenorthwestern.com | Gannett | 61 |
| amestrib.com | Gannett | 62 |
| chillicothegazette.com | Gannett | 62 |
| countypress.co.uk | Gannett | 62 |
| dorsetecho.co.uk | Gannett | 62 |
| gadsdentimes.com | Gannett | 62 |
| gazette-news.co.uk | Gannett | 62 |
| milforddailynews.com | Gannett | 62 |
| newsleader.com | Gannett | 62 |
| petoskeynews.com | Gannett | 62 |
| swtimes.com | Gannett | 62 |
| theintell.com | Gannett | 62 |
| thetowntalk.com | Gannett | 62 |
| thisislocallondon.co.uk | Gannett | 62 |
| warringtonguardian.co.uk | Gannett | 62 |
| fdlreporter.com | Gannett | 63 |
| heraldnews.com | Gannett | 63 |
| heraldtimesonline.com | Gannett | 63 |
| hollandsentinel.com | Gannett | 63 |
| houmatoday.com | Gannett | 63 |
| marionstar.com | Gannett | 63 |
| monroenews.com | Gannett | 63 |
| nwemail.co.uk | Gannett | 63 |
| thedailyjournal.com | Gannett | 63 |
| aberdeennews.com | Gannett | 64 |
| islingtongazette.co.uk | Gannett | 64 |
| tauntongazette.com | Gannett | 64 |
| thewestmorlandgazette.co.uk | Gannett | 64 |
| timesonline.com | Gannett | 64 |
| watfordobserver.co.uk | Gannett | 64 |
| yourlocalguardian.co.uk | Gannett | 64 |
| amarillo.com | Gannett | 65 |
| columbiadailyherald.com | Gannett | 65 |
| dnj.com | Gannett | 65 |
| hattiesburgamerican.com | Gannett | 65 |
| ithacajournal.com | Gannett | 65 |
| mansfieldnewsjournal.com | Gannett | 65 |
| southwalesargus.co.uk | Gannett | 65 |
| burlingtoncountytimes.com | Gannett | 66 |
| fosters.com | Gannett | 66 |
| guardian-series.co.uk | Gannett | 66 |
| jacksonsun.com | Gannett | 66 |
| newsandstar.co.uk | Gannett | 66 |
| recordnet.com | Gannett | 66 |
| swindonadvertiser.co.uk | Gannett | 66 |
| thestarpress.com | Gannett | 66 |
| thetelegraphandargus.co.uk | Gannett | 66 |
| uticaod.com | Gannett | 66 |
| worcesternews.co.uk | Gannett | 66 |
| augustachronicle.com | Gannett | 67 |
| capecodtimes.com | Gannett | 67 |
| columbiatribune.com | Gannett | 67 |
| dailyrecord.com | Gannett | 67 |
| hamhigh.co.uk | Gannett | 67 |
| ocala.com | Gannett | 67 |
| poconorecord.com | Gannett | 67 |
| reporternews.com | Gannett | 67 |
| shreveporttimes.com | Gannett | 67 |
| thenewsstar.com | Gannett | 67 |
| thetimesherald.com | Gannett | 67 |
| battlecreekenquirer.com | Gannett | 68 |
| goupstate.com | Gannett | 68 |
| patriotledger.com | Gannett | 68 |
| southcoasttoday.com | Gannett | 68 |
| theadvertiser.com | Gannett | 68 |
| thecalifornian.com | Gannett | 68 |
| zanesvilletimesrecorder.com | Gannett | 68 |
| argusleader.com | Gannett | 69 |
| eadt.co.uk | Gannett | 69 |
| independentmail.com | Gannett | 69 |
| ldnews.com | Gannett | 69 |
| newsherald.com | Gannett | 69 |
| pressconnects.com | Gannett | 69 |
| redding.com | Gannett | 69 |
| romfordrecorder.co.uk | Gannett | 69 |
| tuscaloosanews.com | Gannett | 69 |
| chieftain.com | Gannett | 70 |
| delmarvanow.com | Gannett | 70 |
| gastongazette.com | Gannett | 70 |
| lansingstatejournal.com | Gannett | 70 |
| metrowestdailynews.com | Gannett | 70 |
| news-journalonline.com | Gannett | 70 |
| nwfdailynews.com | Gannett | 70 |
| onlineathens.com | Gannett | 70 |
| theboltonnews.co.uk | Gannett | 70 |
| thespectrum.com | Gannett | 70 |
| vvdailypress.com | Gannett | 70 |
| courierpress.com | Gannett | 71 |
| dailycommercial.com | Gannett | 71 |
| enterprisenews.com | Gannett | 71 |
| heraldmailmedia.com | Gannett | 71 |
| hutchnews.com | Gannett | 71 |
| ipswichstar.co.uk | Gannett | 71 |
| kitsapsun.com | Gannett | 71 |
| njherald.com | Gannett | 71 |
| poughkeepsiejournal.com | Gannett | 71 |
| press-citizen.com | Gannett | 71 |
| registerguard.com | Gannett | 71 |
| timesrecordnews.com | Gannett | 71 |
| courierpostonline.com | Gannett | 72 |
| echo-news.co.uk | Gannett | 72 |
| jconline.com | Gannett | 72 |
| norwichbulletin.com | Gannett | 72 |
| rrstar.com | Gannett | 72 |
| sctimes.com | Gannett | 72 |
| staugustine.com | Gannett | 72 |
| theleafchronicle.com | Gannett | 72 |
| greatfallstribune.com | Gannett | 73 |
| recordonline.com | Gannett | 73 |
| starnewsonline.com | Gannett | 73 |
| cjonline.com | Gannett | 74 |
| eveningnews24.co.uk | Gannett | 74 |
| glasgowtimes.co.uk | Gannett | 74 |
| greenvilleonline.com | Gannett | 74 |
| montgomeryadvertiser.com | Gannett | 74 |
| newsshopper.co.uk | Gannett | 74 |
| palmbeachdailynews.com | Gannett | 74 |
| pjstar.com | Gannett | 74 |
| pnj.com | Gannett | 74 |
| seacoastonline.com | Gannett | 74 |
| southbendtribune.com | Gannett | 74 |
| theledger.com | Gannett | 74 |
| vcstar.com | Gannett | 74 |
| visaliatimesdelta.com | Gannett | 74 |
| gosanangelo.com | Gannett | 75 |
| rgj.com | Gannett | 75 |
| sj-r.com | Gannett | 75 |
| tcpalm.com | Gannett | 75 |
| thenorthernecho.co.uk | Gannett | 75 |
| burlingtonfreepress.com | Gannett | 76 |
| caller.com | Gannett | 76 |
| coloradoan.com | Gannett | 76 |
| dailyecho.co.uk | Gannett | 76 |
| lcsun-news.com | Gannett | 76 |
| savannahnow.com | Gannett | 76 |
| thenational.scot | Gannett | 76 |
| wausaudailyherald.com | Gannett | 76 |
| citizen-times.com | Gannett | 77 |
| elpasotimes.com | Gannett | 77 |
| fayobserver.com | Gannett | 77 |
| goerie.com | Gannett | 77 |
| lancashiretelegraph.co.uk | Gannett | 77 |
| postcrescent.com | Gannett | 77 |
| yorkpress.co.uk | Gannett | 77 |
| cantonrep.com | Gannett | 78 |
| edp24.co.uk | Gannett | 78 |
| floridatoday.com | Gannett | 78 |
| greenbaypressgazette.com | Gannett | 78 |
| mycentraljersey.com | Gannett | 78 |
| news-leader.com | Gannett | 78 |
| oxfordmail.co.uk | Gannett | 78 |
| providencejournal.com | Gannett | 78 |
| tallahassee.com | Gannett | 78 |
| commercialappeal.com | Gannett | 79 |
| democratandchronicle.com | Gannett | 79 |
| desertsun.com | Gannett | 79 |
| gainesville.com | Gannett | 79 |
| ydr.com | Gannett | 79 |
| beaconjournal.com | Gannett | 80 |
| heraldtribune.com | Gannett | 80 |
| jacksonville.com | Gannett | 80 |
| lohud.com | Gannett | 80 |
| lubbockonline.com | Gannett | 80 |
| news-press.com | Gannett | 80 |
| statesmanjournal.com | Gannett | 80 |
| app.com | Gannett | 81 |
| bournemouthecho.co.uk | Gannett | 81 |
| delawareonline.com | Gannett | 81 |
| dispatch.com | Gannett | 81 |
| naplesnews.com | Gannett | 81 |
| telegram.com | Gannett | 81 |
| theargus.co.uk | Gannett | 81 |
| clarionledger.com | Gannett | 82 |
| northjersey.com | Gannett | 82 |
| tucson.com | Gannett | 82 |
| courier-journal.com | Gannett | 83 |
| desmoinesregister.com | Gannett | 84 |
| indystar.com | Gannett | 84 |
| knoxnews.com | Gannett | 84 |
| oklahoman.com | Gannett | 84 |
| cincinnati.com | Gannett | 85 |
| palmbeachpost.com | Gannett | 85 |
| tennessean.com | Gannett | 85 |
| heraldscotland.com | Gannett | 86 |
| statesman.com | Gannett | 87 |
| jsonline.com | Gannett | 89 |
| azcentral.com | Gannett | 90 |
| freep.com | Gannett | 91 |
| usatoday.com | Gannett | 94 |
| crimeandinvestigationnetwork.com | Hearst | 1 |
| arcwcrew.com | Hearst | 17 |
| firstfinds.com | Hearst | 21 |
| mor-tv.com | Hearst | 39 |
| blaze.tv | Hearst | 41 |
| bigrapidsnews.com | Hearst | 43 |
| fdbhealth.com | Hearst | 47 |
| 98online.com | Hearst | 49 |
| manisteenews.com | Hearst | 49 |
| myjournalcourier.com | Hearst | 58 |
| fitchsolutions.com | Hearst | 59 |
| michigansthumb.com | Hearst | 60 |
| fyi.tv | Hearst | 61 |
| myplainview.com | Hearst | 61 |
| kingfeatures.com | Hearst | 62 |
| thetelegraph.com | Hearst | 63 |
| wptz.com | Hearst | 63 |
| mrt.com | Hearst | 64 |
| theintelligencer.com | Hearst | 65 |
| veranda.com | Hearst | 66 |
| bestproducts.com | Hearst | 68 |
| fitchratings.com | Hearst | 70 |
| abc-7.com | Hearst | 71 |
| wjcl.com | Hearst | 71 |
| wmtw.com | Hearst | 71 |
| weekand.com | Hearst | 72 |
| wgal.com | Hearst | 72 |
| wvtm13.com | Hearst | 72 |
| bicycling.com | Hearst | 73 |
| ksbw.com | Hearst | 73 |
| newstimes.com | Hearst | 73 |
| registercitizen.com | Hearst | 73 |
| thehour.com | Hearst | 73 |
| greenwichtime.com | Hearst | 74 |
| koat.com | Hearst | 74 |
| nhregister.com | Hearst | 74 |
| oprahdaily.com | Hearst | 74 |
| redbookmag.com | Hearst | 74 |
| wbal.com | Hearst | 74 |
| wlky.com | Hearst | 74 |
| wtae.com | Hearst | 74 |
| 4029tv.com | Hearst | 75 |
| hearstmags.com | Hearst | 75 |
| lmtonline.com | Hearst | 75 |
| middletownpress.com | Hearst | 75 |
| beaumontenterprise.com | Hearst | 76 |
| kcci.com | Hearst | 76 |
| nbc-2.com | Hearst | 76 |
| wapt.com | Hearst | 76 |
| ctpost.com | Hearst | 77 |
| ketv.com | Hearst | 77 |
| kmbc.com | Hearst | 77 |
| koco.com | Hearst | 77 |
| mynbc5.com | Hearst | 77 |
| stamfordadvocate.com | Hearst | 77 |
| wdsu.com | Hearst | 77 |
| wxii12.com | Hearst | 77 |
| wyff4.com | Hearst | 77 |
| expressnews.com | Hearst | 78 |
| kcra.com | Hearst | 78 |
| ourmidland.com | Hearst | 78 |
| wisn.com | Hearst | 78 |
| wmur.com | Hearst | 78 |
| housebeautiful.com | Hearst | 79 |
| wpbf.com | Hearst | 79 |
| elledecor.com | Hearst | 80 |
| thepioneerwoman.com | Hearst | 80 |
| wbaltv.com | Hearst | 80 |
| wesh.com | Hearst | 80 |
| wlwt.com | Hearst | 80 |
| roadandtrack.com | Hearst | 81 |
| womansday.com | Hearst | 81 |
| timesunion.com | Hearst | 82 |
| mysanantonio.com | Hearst | 83 |
| townandcountrymag.com | Hearst | 83 |
| wcvb.com | Hearst | 83 |
| countryliving.com | Hearst | 84 |
| houstonchronicle.com | Hearst | 84 |
| seattlepi.com | Hearst | 84 |
| seventeen.com | Hearst | 84 |
| autoweek.com | Hearst | 85 |
| caranddriver.com | Hearst | 85 |
| delish.com | Hearst | 85 |
| prevention.com | Hearst | 85 |
| runnersworld.com | Hearst | 86 |
| marieclaire.com | Hearst | 88 |
| popularmechanics.com | Hearst | 88 |
| sfchronicle.com | Hearst | 88 |
| womenshealthmag.com | Hearst | 88 |
| menshealth.com | Hearst | 89 |
| biography.com | Hearst | 90 |
| esquire.com | Hearst | 90 |
| goodhousekeeping.com | Hearst | 90 |
| harpersbazaar.com | Hearst | 90 |
| chron.com | Hearst | 91 |
| elle.com | Hearst | 91 |
| cosmopolitan.com | Hearst | 92 |
| sfgate.com | Hearst | 93 |
| myhammer.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 14 |
| askmediagroup.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 28 |
| bodynetwork.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 30 |
| celebwell.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 30 |
| craftjack.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 45 |
| vivian.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 45 |
| techboomers.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 50 |
| homestars.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 53 |
| woodmagazine.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 54 |
| allpeoplequilt.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 55 |
| mybuilder.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 58 |
| dailypaws.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 59 |
| magazines.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 59 |
| realage.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 60 |
| midwestliving.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 61 |
| mywedding.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 61 |
| travaux.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 62 |
| handy.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 65 |
| familycircle.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 66 |
| thebalancemoney.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 68 |
| agriculture.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 70 |
| thesprucecrafts.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 70 |
| verywellfamily.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 70 |
| thebalancesmb.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 71 |
| thesprucepets.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 71 |
| life.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 72 |
| mydomaine.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 72 |
| thebalancecareers.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 72 |
| fitnessmagazine.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 73 |
| bestlifeonline.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 75 |
| verywell.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 75 |
| thespruceeats.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 76 |
| tripsavvy.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 76 |
| angi.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 77 |
| cookinglight.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 77 |
| thespruce.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 77 |
| verywellfit.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 77 |
| homeadvisor.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 78 |
| byrdie.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 79 |
| care.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 79 |
| eatthis.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 79 |
| bhg.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 80 |
| verywellhealth.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 80 |
| eatingwell.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 81 |
| liquor.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 81 |
| peopleenespanol.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 81 |
| thebalance.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 82 |
| verywellmind.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 82 |
| shape.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 84 |
| southernliving.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 84 |
| treehugger.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 84 |
| brides.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 85 |
| parents.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 85 |
| foodandwine.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 86 |
| hellogiggles.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 86 |
| health.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 87 |
| simplyrecipes.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 87 |
| ask.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 88 |
| instyle.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 88 |
| lifewire.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 88 |
| realsimple.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 88 |
| seriouseats.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 88 |
| travelandleisure.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 88 |
| marthastewart.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 91 |
| allrecipes.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 92 |
| ew.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 92 |
| investopedia.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 92 |
| thedailybeast.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 92 |
| thoughtco.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 92 |
| about.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 93 |
| people.com | IAC (Dotdash Meredith, Angi Inc., Search, and Emerging & Other divisions) | 93 |
| australiannewschannel.com.au | NewsCorp | 5 |
| fnlondon.com | NewsCorp | 1 |
| times.radio | NewsCorp | 16 |
| boxsetschannel.com.au | NewsCorp | 18 |
| tips.com.au | NewsCorp | 24 |
| foxclassics.com.au | NewsCorp | 26 |
| northshoretimes.com.au | NewsCorp | 27 |
| foxtelmovies.com.au | NewsCorp | 28 |
| thesundaymail.com.au | NewsCorp | 30 |
| aetv.com.au | NewsCorp | 33 |
| onebigswitch.com.au | NewsCorp | 33 |
| odds.com.au | NewsCorp | 35 |
| hubbl.com.au | NewsCorp | 39 |
| eurekareport.com.au | NewsCorp | 41 |
| foxshowcase.com.au | NewsCorp | 43 |
| foxnewsradio.com | NewsCorp | 44 |
| penews.com | NewsCorp | 46 |
| fncstatic.com | NewsCorp | 47 |
| mainevent.com.au | NewsCorp | 48 |
| punters.com.au | NewsCorp | 50 |
| binge.com.au | NewsCorp | 51 |
| newscorpaustralia.com | NewsCorp | 52 |
| racenet.com.au | NewsCorp | 52 |
| factiva.com | NewsCorp | 54 |
| kayosports.com.au | NewsCorp | 54 |
| bestrecipes.com.au | NewsCorp | 55 |
| hipages.com.au | NewsCorp | 55 |
| homelife.com.au | NewsCorp | 56 |
| lifestyle.com.au | NewsCorp | 56 |
| lifestylefood.com.au | NewsCorp | 56 |
| historychannel.com.au | NewsCorp | 57 |
| news.co.uk | NewsCorp | 59 |
| virginradio.co.uk | NewsCorp | 60 |
| delicious.com.au | NewsCorp | 63 |
| bodyandsoul.com.au | NewsCorp | 66 |
| move.com | NewsCorp | 66 |
| gq.com.au | NewsCorp | 67 |
| storyful.com | NewsCorp | 67 |
| talk.tv | NewsCorp | 68 |
| kidspot.com.au | NewsCorp | 69 |
| the-tls.co.uk | NewsCorp | 71 |
| thesundaytimes.co.uk | NewsCorp | 72 |
| newscorp.com | NewsCorp | 74 |
| foxtel.com.au | NewsCorp | 75 |
| ntnews.com.au | NewsCorp | 75 |
| themercury.com.au | NewsCorp | 76 |
| realestate.com.au | NewsCorp | 77 |
| dowjones.com | NewsCorp | 78 |
| wsj.net | NewsCorp | 78 |
| adelaidenow.com.au | NewsCorp | 79 |
| vogue.com.au | NewsCorp | 79 |
| fox8.com | NewsCorp | 80 |
| mansionglobal.com | NewsCorp | 80 |
| foxsports.com.au | NewsCorp | 83 |
| harpercollins.com | NewsCorp | 83 |
| decider.com | NewsCorp | 85 |
| investors.com | NewsCorp | 85 |
| couriermail.com.au | NewsCorp | 86 |
| skynews.com.au | NewsCorp | 86 |
| talksport.com | NewsCorp | 88 |
| theaustralian.com.au | NewsCorp | 88 |
| barrons.com | NewsCorp | 89 |
| dailytelegraph.com.au | NewsCorp | 89 |
| foxsports.com | NewsCorp | 89 |
| heraldsun.com.au | NewsCorp | 89 |
| foxbusiness.com | NewsCorp | 90 |
| pagesix.com | NewsCorp | 90 |
| realtor.com | NewsCorp | 90 |
| marketwatch.com | NewsCorp | 92 |
| news.com.au | NewsCorp | 93 |
| nypost.com | NewsCorp | 93 |
| thetimes.co.uk | NewsCorp | 93 |
| foxnews.com | NewsCorp | 94 |
| thesun.co.uk | NewsCorp | 94 |
| wsj.com | NewsCorp | 94 |
| atxtv.co | Penske Media Corporation | 19 |
| atxtv.com | Penske Media Corporation | 26 |
| la3c.com | Penske Media Corporation | 29 |
| ampav.com | Penske Media Corporation | 40 |
| shemedia.com | Penske Media Corporation | 49 |
| luminatedata.com | Penske Media Corporation | 54 |
| dickclark.com | Penske Media Corporation | 57 |
| spy.com | Penske Media Corporation | 57 |
| lifeisbeautiful.com | Penske Media Corporation | 58 |
| theflowspace.com | Penske Media Corporation | 58 |
| streamys.org | Penske Media Corporation | 59 |
| acmcountry.com | Penske Media Corporation | 61 |
| sportico.com | Penske Media Corporation | 66 |
| goldenglobes.com | Penske Media Corporation | 71 |
| artforum.com | Penske Media Corporation | 72 |
| artnews.com | Penske Media Corporation | 74 |
| sourcingjournal.com | Penske Media Corporation | 74 |
| theamas.com | Penske Media Corporation | 75 |
| stylecaster.com | Penske Media Corporation | 78 |
| goldderby.com | Penske Media Corporation | 81 |
| vibe.com | Penske Media Corporation | 82 |
| robbreport.com | Penske Media Corporation | 83 |
| tvline.com | Penske Media Corporation | 85 |
| footwearnews.com | Penske Media Corporation | 86 |
| sheknows.com | Penske Media Corporation | 86 |
| sxsw.com | Penske Media Corporation | 87 |
| wwd.com | Penske Media Corporation | 87 |
| bgr.com | Penske Media Corporation | 90 |
| deadline.com | Penske Media Corporation | 91 |
| indiewire.com | Penske Media Corporation | 91 |
| billboard.com | Penske Media Corporation | 92 |
| rollingstone.com | Penske Media Corporation | 92 |
| hollywoodreporter.com | Penske Media Corporation | 93 |
| variety.com | Penske Media Corporation | 93 |
| bezzypsoriasis.com | Red Ventures | 13 |
| bezzycopd.com | Red Ventures | 23 |
| bezzypsa.com | Red Ventures | 23 |
| bezzymigraine.com | Red Ventures | 25 |
| bezzyibd.com | Red Ventures | 29 |
| plateapr.com | Red Ventures | 29 |
| bezzybc.com | Red Ventures | 32 |
| bezzyra.com | Red Ventures | 33 |
| bezzyt2d.com | Red Ventures | 33 |
| bezzyms.com | Red Ventures | 39 |
| bezzydepression.com | Red Ventures | 48 |
| redventures.com | Red Ventures | 57 |
| allconnect.com | Red Ventures | 61 |
| bestcolleges.com | Red Ventures | 64 |
| money.co.uk | Red Ventures | 65 |
| perks.optum.com | Red Ventures | 66 |
| store.optum.com | Red Ventures | 66 |
| confused.com | Red Ventures | 67 |
| creditcards.com | Red Ventures | 70 |
| healthgrades.com | Red Ventures | 70 |
| mymove.com | Red Ventures | 70 |
| uswitch.com | Red Ventures | 74 |
| bankrate.com | Red Ventures | 81 |
| greatist.com | Red Ventures | 81 |
| psychcentral.com | Red Ventures | 83 |
| thepointsguy.com | Red Ventures | 84 |
| healthline.com | Red Ventures | 91 |
| lonelyplanet.com | Red Ventures | 92 |
| medicalnewstoday.com | Red Ventures | 92 |
| nyt.net | The New York Times Company | 33 |

| wirecutter.com | The New York Times Company | 39 |

| newyorktimes.com | The New York Times Company | 54 |

| nyt.com | The New York Times Company | 77 |

| nyti.ms | The New York Times Company | 79 |

| nytco.com | The New York Times Company | 80 |

| thewirecutter.com | The New York Times Company | 84 |

| theathletic.com | The New York Times Company | 86 |

| nytimes.com | The New York Times Company | 95 |

| disneyenglish.com | The Walt Disney Company | 25 |

| dmdcentral.com | The Walt Disney Company | 25 |

| disneyabc.tv | The Walt Disney Company | 28 |

| disneylachaine.ca | The Walt Disney Company | 29 |

| nowtv.com.tr | The Walt Disney Company | 33 |

| patagonik.com.ar | The Walt Disney Company | 37 |

| abcspark.ca | The Walt Disney Company | 43 |

| disneystudioshelp.com | The Walt Disney Company | 45 |

| radiodisney.com | The Walt Disney Company | 45 |

| disney.pt | The Walt Disney Company | 46 |

| natgeomaps.com | The Walt Disney Company | 49 |

| hollywoodrecords.com | The Walt Disney Company | 50 |

| abcotvs.com | The Walt Disney Company | 51 |

| babytv.com | The Walt Disney Company | 53 |

| tamronhallshow.com | The Walt Disney Company | 53 |

| disneystar.com | The Walt Disney Company | 54 |

| disney.it | The Walt Disney Company | 58 |

| dgepress.com | The Walt Disney Company | 60 |

| livewithkellyandmark.com | The Walt Disney Company | 60 |

| telecine.com.br | The Walt Disney Company | 61 |

| disneystudios.com | The Walt Disney Company | 62 |

| natgeo.com | The Walt Disney Company | 63 |

| go.com | The Walt Disney Company | 64 |

| starplus.com | The Walt Disney Company | 64 |

| disneynow.com | The Walt Disney Company | 67 |

| clubpenguin.com | The Walt Disney Company | 68 |

| moviesanywhere.com | The Walt Disney Company | 68 |

| lucasfilm.com | The Walt Disney Company | 69 |

| searchlightpictures.com | The Walt Disney Company | 69 |

| disney.co.uk | The Walt Disney Company | 73 |

| tv.disney.es | The Walt Disney Company | 73 |

| abc30.com | The Walt Disney Company | 74 |

| freeform.com | The Walt Disney Company | 74 |

| fxnetworks.com | The Walt Disney Company | 78 |

| abcnews.com | The Walt Disney Company | 79 |

| 20thcenturystudios.com | The Walt Disney Company | 80 |

| disneyplus.com | The Walt Disney Company | 80 |

| hotstar.com | The Walt Disney Company | 80 |

| abc11.com | The Walt Disney Company | 81 |

| 6abc.com | The Walt Disney Company | 82 |

| abc7.com | The Walt Disney Company | 83 |

| foxmovies.com | The Walt Disney Company | 83 |

| abc13.com | The Walt Disney Company | 85 |

| abc7chicago.com | The Walt Disney Company | 85 |

| abc7news.com | The Walt Disney Company | 86 |

| abc7ny.com | The Walt Disney Company | 86 |

| marvel.com | The Walt Disney Company | 88 |

| abc.com | The Walt Disney Company | 92 |

| abcnews.go.com | The Walt Disney Company | 93 |

| disney.com | The Walt Disney Company | 93 |

| espn.com | The Walt Disney Company | 93 |

| nationalgeographic.com | The Walt Disney Company | 93 |

| radio.disney.com | The Walt Disney Company | 93 |

| washingtonposttv.com | The Washington Post | 1 |

| wapo.com | The Washington Post | 38 |

| washingtonpostlive.com | The Washington Post | 43 |

| arcxp.com | The Washington Post | 45 |

| washpost.com | The Washington Post | 53 |

| washingtonpost.com | The Washington Post | 94 |

| cornnation.com | Vox Media | 51 |

| onefootdown.com | Vox Media | 52 |

| rawcharge.com | Vox Media | 52 |

| redreporter.com | Vox Media | 53 |

| dukebasketballreport.com | Vox Media | 54 |

| letsgotribe.com | Vox Media | 54 |

| lonestarball.com | Vox Media | 54 |

| rollbamaroll.com | Vox Media | 54 |

| hammerandrails.com | Vox Media | 55 |

| stanleycupofchowder.com | Vox Media | 55 |

| vivaelbirdos.com | Vox Media | 55 |

| azdesertswarm.com | Vox Media | 56 |

| blueshirtbanter.com | Vox Media | 56 |

| broadstreethockey.com | Vox Media | 56 |

| crawfishboxes.com | Vox Media | 56 |

| denverstiffs.com | Vox Media | 56 |

| secondcityhockey.com | Vox Media | 56 |

| burntorangenation.com | Vox Media | 57 |

| pensburgh.com | Vox Media | 57 |

| federalbaseball.com | Vox Media | 58 |

| lookoutlanding.com | Vox Media | 58 |

| talkingchop.com | Vox Media | 58 |

| blessyouboys.com | Vox Media | 59 |

| athleticsnation.com | Vox Media | 60 |

| halosheaven.com | Vox Media | 60 |

| hogshaven.com | Vox Media | 60 |

| mavsmoneyball.com | Vox Media | 61 |

| overthemonster.com | Vox Media | 61 |

| tomahawknation.com | Vox Media | 61 |

| amazinavenue.com | Vox Media | 62 |

| aseaofblue.com | Vox Media | 62 |

| barcablaugranes.com | Vox Media | 62 |

| bleedcubbieblue.com | Vox Media | 62 |

| bucsnation.com | Vox Media | 62 |

| pinstripealley.com | Vox Media | 62 |

| poundingtherock.com | Vox Media | 62 |

| truebluela.com | Vox Media | 62 |

| bigcatcountry.com | Vox Media | 63 |

| beyondtheboxscore.com | Vox Media | 64 |

| maizenbrew.com | Vox Media | 64 |

| postingandtoasting.com | Vox Media | 64 |

| acmepackingcompany.com | Vox Media | 65 |

| bigblueview.com | Vox Media | 66 |

| royalsreview.com | Vox Media | 66 |

| silverandblackpride.com | Vox Media | 66 |

| arrowheadpride.com | Vox Media | 68 |

| blazersedge.com | Vox Media | 68 |

| bloggingtheboys.com | Vox Media | 68 |

| buffalorumblings.com | Vox Media | 68 |

| thephinsider.com | Vox Media | 68 |

| windycitygridiron.com | Vox Media | 68 |

| celticsblog.com | Vox Media | 69 |

| dailynorseman.com | Vox Media | 69 |

| bleedinggreennation.com | Vox Media | 70 |

| fieldgulls.com | Vox Media | 70 |

| goldenstateofmind.com | Vox Media | 70 |

| mmamania.com | Vox Media | 70 |

| prideofdetroit.com | Vox Media | 70 |

| badlefthook.com | Vox Media | 71 |

| behindthesteelcurtain.com | Vox Media | 71 |

| mccoveychronicles.com | Vox Media | 71 |

| milehighreport.com | Vox Media | 71 |

| patspulpit.com | Vox Media | 72 |

| silverscreenandroll.com | Vox Media | 72 |

| cincyjungle.com | Vox Media | 73 |

| netsdaily.com | Vox Media | 73 |

| nowmedianetwork.co | Vox Media | 74 |

| ninersnation.com | Vox Media | 75 |

| outsports.com | Vox Media | 76 |

| voxmedia.com | Vox Media | 76 |

| thedodo.com | Vox Media | 78 |

| cagesideseats.com | Vox Media | 79 |

| grubstreet.com | Vox Media | 79 |

| racked.com | Vox Media | 81 |

| bloodyelbow.com | Vox Media | 83 |

| mmafighting.com | Vox Media | 83 |

| theringer.com | Vox Media | 83 |

| eater.com | Vox Media | 85 |

| curbed.com | Vox Media | 87 |

| thecut.com | Vox Media | 88 |

| thrillist.com | Vox Media | 89 |

| vulture.com | Vox Media | 89 |

| polygon.com | Vox Media | 91 |

| popsugar.com | Vox Media | 91 |

| sbnation.com | Vox Media | 91 |

| nymag.com | Vox Media | 92 |

| vox.com | Vox Media | 92 |

| theverge.com | Vox Media | 93 |

| hogarhgtv.com | Warner Bros. Discovery | 10 |

| warnerbrosdiscovery.dk | Warner Bros. Discovery | 21 |

| onefifty.com | Warner Bros. Discovery | 26 |

| giallotv.it | Warner Bros. Discovery | 29 |

| pogo.tv | Warner Bros. Discovery | 36 |

| warnertv.de | Warner Bros. Discovery | 36 |

| tabichan.jp | Warner Bros. Discovery | 38 |

| mondotv.jp | Warner Bros. Discovery | 40 |

| realtime.it | Warner Bros. Discovery | 42 |

| boingtv.it | Warner Bros. Discovery | 45 |

| nove.tv | Warner Bros. Discovery | 45 |